AI Prompts for Smarter Data Science & Analysis

If you’re still writing generic prompts like “analyze my data” or “help me with statistics,” you’re not using AI to its full potential. We can do better than that, especially when you’re working in a notebook as powerful as Livedocs.

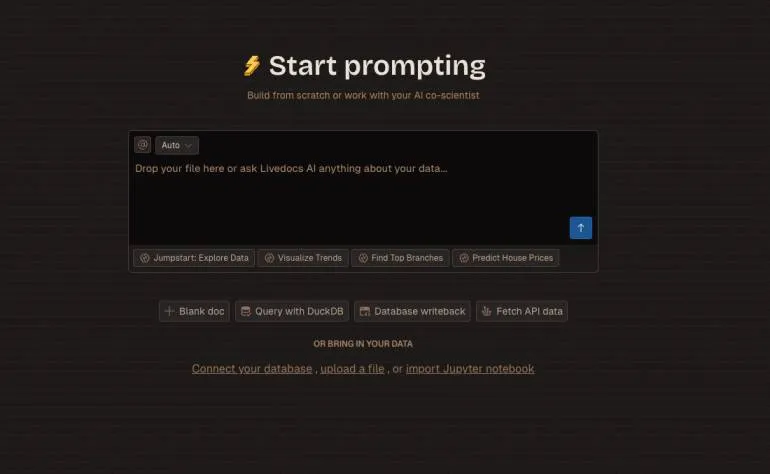

Livedocs is a collaborative, no-code AI notebook for data scientists and machine learning engineers. Soon we will launch Agent 2.0, a more powerful agent that can automatically handle, analyze, and present your data notebooks, all with no code!

Join Livedocs now to stay updated.

—

The Prompts That Will Save You Time

Data Quality Checking

Here is what I use:

I'm working with [dataset type] containing [brief description].

Create a comprehensive data quality audit that includes:

1. Missing value patterns with business impact assessment

2. Outlier detection using domain-specific thresholds for [your industry]

3. Data consistency checks across related fields

4. Temporal anomalies if time-series data exists

5. Recommendations ranked by potential revenue/risk impact

Context: This data feeds into [specific business decision/model]

Timeline: Need actionable insights within [timeframe]

Risk tolerance: [conservative/moderate/aggressive]

Pro tip

Always include the downstream impact. When you tell the AI that missing customer data affects churn prediction models, it’ll prioritize those quality checks differently than if you’re just doing exploratory analysis. The context changes everything.
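To show what the first two checklist items boil down to, here is a minimal stdlib-only Python sketch of a missing-value summary and an interquartile-range (IQR) outlier check. The column names, sample rows, and the generic 1.5 × IQR fence are illustrative assumptions; the prompt asks the AI for domain-specific thresholds, which would replace that multiplier.

```python
import statistics

# Hypothetical sample rows; None marks a missing value.
rows = [
    {"customer_id": 1, "monthly_spend": 42.0, "tenure_months": 12},
    {"customer_id": 2, "monthly_spend": None, "tenure_months": 3},
    {"customer_id": 3, "monthly_spend": 39.5, "tenure_months": None},
    {"customer_id": 4, "monthly_spend": 41.0, "tenure_months": 24},
    {"customer_id": 5, "monthly_spend": 950.0, "tenure_months": 18},
    {"customer_id": 6, "monthly_spend": 44.5, "tenure_months": 7},
]

def missing_rates(rows):
    """Return the share of missing (None) values per column."""
    columns = rows[0].keys()
    return {col: sum(r[col] is None for r in rows) / len(rows) for col in columns}

def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR]; k=1.5 is a generic default, not a domain rule."""
    present = [v for v in values if v is not None]
    # "inclusive" treats the data as the whole population, which behaves better on small samples.
    q1, _, q3 = statistics.quantiles(present, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in present if v < low or v > high]

print(missing_rates(rows))
print(iqr_outliers([r["monthly_spend"] for r in rows]))
```

The numbers alone don't tell you which gaps matter; that is exactly the business-impact judgment the prompt's context lines are meant to supply.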

—

Writing SQL Queries

Most people ask for SQL help like this: “Write me a query.” That doesn’t give the agent enough information to work with.

Try this instead:

Generate an optimized SQL query that answers: [specific business question]

Database context:

- Schema: [provide key tables and relationships]

- Data volume: [approximate row counts]

- Performance constraints: [query timeout limits]

- Business rules: [any data filtering requirements]

Output requirements:

- Aggregation level: [daily/weekly/monthly/customer-level]

- Key metrics: [specific calculations needed]

- Format: Ready for visualization in [chart type]

Also provide:

- Query execution plan explanation

- Alternative approaches for better performance

- Data validation checks to include

Pro tip

Mention your visualization end goal. Livedocs excels at turning data into interactive, shareable visual stories, so when you specify “format for time-series chart,” the AI can shape the result to fit that chart, for example one row per date with one column per metric.
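To illustrate what a well-specified request can come back with, here is a minimal sketch using Python’s built-in sqlite3 module against a hypothetical orders table: a daily aggregation shaped for a time-series chart (one row per date), a business-rule filter, a reconciliation check, and SQLite’s EXPLAIN QUERY PLAN. The schema, data, and “exclude refunds” rule are assumptions; your warehouse will have its own SQL dialect and query planner.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schema and rows for illustration only.
conn.executescript("""
CREATE TABLE orders (
    order_id   INTEGER PRIMARY KEY,
    order_date TEXT NOT NULL,   -- ISO-8601 date
    status     TEXT NOT NULL,
    amount     REAL NOT NULL
);
CREATE INDEX idx_orders_date ON orders(order_date);
INSERT INTO orders (order_date, status, amount) VALUES
    ('2024-01-01', 'completed', 120.0),
    ('2024-01-01', 'refunded',   30.0),
    ('2024-01-02', 'completed',  80.0),
    ('2024-01-02', 'completed',  45.0),
    ('2024-01-03', 'completed', 200.0);
""")

# Daily revenue from completed orders: one row per date, ready for a line chart.
DAILY_REVENUE = """
SELECT order_date,
       COUNT(*)    AS orders,
       SUM(amount) AS revenue
FROM orders
WHERE status = 'completed'   -- assumed business rule: exclude refunds
GROUP BY order_date
ORDER BY order_date
"""
daily = conn.execute(DAILY_REVENUE).fetchall()

# Validation check: the daily totals must reconcile with the raw table.
(raw_total,) = conn.execute(
    "SELECT SUM(amount) FROM orders WHERE status = 'completed'"
).fetchone()
assert abs(sum(row[2] for row in daily) - raw_total) < 1e-9

# Show how SQLite plans to execute the aggregation.
for step in conn.execute("EXPLAIN QUERY PLAN " + DAILY_REVENUE):
    print(step)
print(daily)
```

The reconciliation check is cheap to run and catches a whole class of filter and join mistakes before a chart ever reaches a stakeholder.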

—

Design a Statistical Test

This one’s a lifesaver when you’re drowning in statistical options:

Help me design the statistical analysis for this scenario:

Research question: [exact question you're answering]

Data characteristics:

- Sample size: [actual numbers]

- Variable types: [continuous/categorical breakdown]

- Distribution assumptions: [what you've observed]

- Potential confounders: [business context factors]

Constraints:

- Business significance threshold: [not just p < 0.05]

- Practical effect size requirements: [minimum meaningful difference]

- Time/computational limits: [if any]

Provide:

- Test selection with detailed rationale

- Assumption validation approach

- Interpretation guidelines for business stakeholders

- Code implementation for the Livedocs environment

Pro tip

Don’t just mention sample size; include the story behind your data collection. If you’re dealing with seasonal data, customer segments, or any natural groupings, that context completely changes which tests make sense.
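The “not just p < 0.05” line in the prompt is easier to act on when an effect size sits next to the test result. Below is a stdlib sketch that computes the raw mean difference and Cohen’s d (using the pooled sample standard deviation) for two hypothetical groups and compares the difference with an assumed business threshold of 2 dollars. It deliberately leaves test selection and p-values to the design step the prompt asks for.

```python
import math
import statistics

def cohens_d(a, b):
    """Cohen's d: mean difference divided by the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)
    pooled_sd = math.sqrt(((na - 1) * va + (nb - 1) * vb) / (na + nb - 2))
    return (statistics.mean(a) - statistics.mean(b)) / pooled_sd

# Hypothetical average order values (dollars) for treatment and control groups.
treatment = [52.0, 48.0, 55.0, 50.0, 53.0, 49.0]
control = [47.0, 45.0, 50.0, 46.0, 48.0, 46.0]

MIN_MEANINGFUL_DIFF = 2.0  # assumed business threshold, in dollars

diff = statistics.mean(treatment) - statistics.mean(control)
d = cohens_d(treatment, control)
print(f"mean difference = {diff:.2f}, Cohen's d = {d:.2f}")
print("practically meaningful" if diff >= MIN_MEANINGFUL_DIFF else "below business threshold")
```

A tiny p-value on a difference below the threshold is still a “no” for the business; stating the threshold in the prompt keeps the AI from over-selling it.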

—

For Feature Engineering

This is where things get interesting. Most feature engineering advice is generic, but your data isn’t:

Design a feature engineering pipeline for [specific ML problem]:

Dataset context:

- Industry: [your domain]

- Prediction target: [specific business outcome]

- Current feature count: [baseline]

- Performance benchmark: [current model metrics]

Generate:

- Domain-specific transformations relevant to [industry patterns]

- Time-based features considering [business cycles/seasonality]

- Interaction features with statistical significance testing

- Feature selection strategy accounting for [model interpretability needs]

- Validation approach preventing data leakage

Implementation requirements:

- Compatible with [specific ML framework]

- Scalable to [expected data volume]

- Maintainable for [team skill level]

Pro tip

The magic happens when you specify your business domain upfront: financial data needs different feature engineering than e-commerce data. Livedocs’ continuous sync technology keeps your data up to date, so mention whether you’re working with real-time features or batch processing.
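To make “time-based features” and “validation preventing data leakage” concrete, here is a small stdlib sketch under assumed field names (date, sales): it derives calendar features, adds a lag feature that only looks backward, and splits records chronologically so no training row comes from the validation period. The cutoff date and feature choices are illustrative, not a recommended feature set.

```python
from datetime import date

def calendar_features(d):
    """Derive simple seasonality features from a date."""
    return {
        "day_of_week": d.weekday(),  # 0 = Monday, 6 = Sunday
        "is_weekend": d.weekday() >= 5,
        "month": d.month,
        "quarter": (d.month - 1) // 3 + 1,
    }

def add_lag_feature(records, key="sales"):
    """Add the previous record's value so each row only sees past data."""
    records = sorted(records, key=lambda r: r["date"])
    previous = None  # the earliest record has no history
    for r in records:
        r[f"prev_{key}"] = previous
        previous = r[key]
    return records

def time_split(records, cutoff):
    """Chronological split: train strictly before the cutoff, validate on or after it."""
    train = [r for r in records if r["date"] < cutoff]
    valid = [r for r in records if r["date"] >= cutoff]
    return train, valid

# Hypothetical daily sales records.
records = [
    {"date": date(2024, 3, 29), "sales": 120},
    {"date": date(2024, 3, 30), "sales": 180},
    {"date": date(2024, 4, 1), "sales": 95},
    {"date": date(2024, 4, 6), "sales": 210},
]
for r in records:
    r.update(calendar_features(r["date"]))
records = add_lag_feature(records)

train, valid = time_split(records, cutoff=date(2024, 4, 1))
print(len(train), len(valid))
```

A random shuffle would let the model train on weeks that come after the ones it is validated on; the chronological cutoff rules that out.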

—

For Model Diagnostics

When your model isn’t performing, don’t just ask “what’s wrong?” Try this:

Diagnose my model performance issues:

Model details:

- Type: [algorithm specifics]

- Current performance: [actual metrics]

- Expected performance: [business requirements]

- Training data timeframe: [temporal scope]

Symptoms:

- Performance degradation pattern: [when/where it fails]

- Error distribution: [which segments suffer most]

- Feature behavior: [any suspicious patterns]

Business context:

- Cost of false positives vs false negatives: [actual ratio]

- Model refresh frequency: [operational constraints]

- Interpretability requirements: [regulatory/business needs]

Provide:

- Bias-variance analysis with visual diagnostics

- Feature stability assessment

- Data drift detection recommendations

- Actionable fixes ranked by expected impact

Pro tip

Always include your error cost structure. If false positives cost your business 10x more than false negatives, that completely changes the diagnostic priorities and recommended fixes.
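Here is a small sketch of why the cost ratio matters: given predicted probabilities and true labels, it picks the decision threshold that minimizes total misclassification cost. The 10:1 ratio mirrors the example in the tip above; the scores and labels are made up, and a real diagnosis would run this on held-out data.

```python
def total_cost(scores, labels, threshold, cost_fp, cost_fn):
    """Total cost when every score >= threshold is classified as positive."""
    cost = 0.0
    for score, label in zip(scores, labels):
        predicted = score >= threshold
        if predicted and not label:
            cost += cost_fp  # false positive
        elif not predicted and label:
            cost += cost_fn  # false negative
    return cost

def best_threshold(scores, labels, cost_fp, cost_fn):
    """Try each observed score (plus 'predict nothing') and return the cheapest threshold."""
    candidates = sorted(set(scores)) + [float("inf")]
    # min() keeps the first (lowest) threshold when several tie.
    return min(candidates, key=lambda t: total_cost(scores, labels, t, cost_fp, cost_fn))

# Hypothetical model scores and true labels (1 = positive).
scores = [0.95, 0.85, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

print(best_threshold(scores, labels, cost_fp=1, cost_fn=1))
print(best_threshold(scores, labels, cost_fp=10, cost_fn=1))
```

With these made-up numbers, equal costs select a threshold of 0.3, while the 10:1 ratio pushes it up to 0.85, accepting two false negatives to avoid any false positives.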

—

Translating for Non-Technical Stakeholders

Here’s something most technical people struggle with: translating results for non-technical stakeholders. This prompt has saved my relationships with countless business partners:

Transform this technical analysis into stakeholder communication for [specific audience]:

Analysis: [attach your Livedocs results]

Audience: [C-suite/product managers/operations team]

Decision context: [what they're trying to decide]

Timeline: [when they need to act]

Create:

- Executive summary with quantified business impact

- Visual story recommendations using Livedocs' interactive features

- Risk assessment in business terms

- Implementation roadmap with resource requirements

- Success metrics and monitoring plan

- Anticipated objections with data-driven responses

Tone: [match their communication style]

Technical depth: [appropriate for audience expertise]

Pro tip

Livedocs enables teams to discuss findings, refine their analysis, and make data-driven decisions faster through collaboration features. Mention the collaborative aspects when you’re presenting to teams that need to work together on implementation.

—

Automating the Repetitive Stuff

Automating the repetitive stuff is where you’ll save the most time. Try this:

Create an automated analysis pipeline for [recurring business question]:

Data pipeline:

- Source: [where data comes from]

- Update frequency: [how often it changes]

- Quality checks: [what could go wrong]

- Notification triggers: [when humans need to intervene]

Analysis requirements:

- Standard calculations: [routine metrics]

- Alert conditions: [when to notify stakeholders]

- Visualization refresh: [dashboard updates]

- Report distribution: [who gets what, when]

Error handling:

- Data availability issues

- Quality threshold failures

- Performance degradation

- Stakeholder notification protocols

Pro tip

Livedocs offers blazing-fast Polars and instant DuckDB queries, so don’t be afraid to set up complex automation: Livedocs can handle it.
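The error-handling part of the prompt can be sketched as a plain check that runs on every refresh and returns alerts instead of silently continuing. The thresholds, column names, and the choice to return a list of alert strings are assumptions for illustration; in practice you would route the alerts to whatever notification channel your team uses.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class QualityThresholds:
    min_rows: int = 100             # fewer rows suggests a partial load (assumed value)
    max_missing_rate: float = 0.05  # allowed share of missing values per column (assumed value)

def check_batch(rows, required_columns, thresholds=QualityThresholds()):
    """Return a list of alert messages; an empty list means the batch passed."""
    alerts = []
    if len(rows) < thresholds.min_rows:
        alerts.append(
            f"Data availability: only {len(rows)} rows (expected >= {thresholds.min_rows})"
        )
    for col in required_columns:
        missing = sum(1 for r in rows if r.get(col) is None)
        rate = missing / len(rows) if rows else 1.0  # an empty batch counts as fully missing
        if rate > thresholds.max_missing_rate:
            alerts.append(f"Quality threshold: {col} is {rate:.0%} missing")
    return alerts

# Hypothetical refresh: 100 rows, 20 of which lack a region.
batch = [{"region": "EU", "revenue": 10.0}] * 80 + [{"region": None, "revenue": 12.0}] * 20
alerts = check_batch(batch, ["region", "revenue"])
for alert in alerts:
    print("ALERT:", alert)
```

Returning alerts rather than raising immediately lets one run report every problem at once, which makes the “when humans need to intervene” decision easier.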

—

Making It Work In Your Workflow

You know what? The best prompt in the world won’t help you if it doesn’t fit into your actual workflow. Here’s how to make these prompts stick:

Start small. Pick one prompt that addresses your biggest time sink, such as the data quality checks you run every week or the monthly reports that always take forever. Master that one prompt until it becomes second nature.

Then build your prompt library. I keep mine in a simple document with the business context filled in for my common use cases. It’s like having templates, but for AI assistance.
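If you’d rather keep that library in code than in a document, Python’s built-in string.Template is enough for a minimal version. The template below is an abbreviated form of the data quality prompt from earlier, and the filled-in values are hypothetical. Because substitute() raises a KeyError when a placeholder is left unfilled, half-finished prompts get caught before they reach the AI.

```python
from string import Template

# A tiny prompt library; the keys and placeholder names are illustrative.
PROMPT_LIBRARY = {
    "data_quality_audit": Template(
        "I'm working with $dataset_type containing $description.\n"
        "Create a comprehensive data quality audit that includes:\n"
        "1. Missing value patterns with business impact assessment\n"
        "2. Outlier detection using domain-specific thresholds for $industry\n"
        "Context: This data feeds into $downstream_use\n"
        "Risk tolerance: $risk_tolerance"
    ),
}

def build_prompt(name, **context):
    """Fill a saved prompt; raises KeyError if any placeholder is missing."""
    return PROMPT_LIBRARY[name].substitute(**context)

prompt = build_prompt(
    "data_quality_audit",
    dataset_type="customer transaction data",
    description="12 months of orders from our web store",
    industry="e-commerce",
    downstream_use="our churn prediction model",
    risk_tolerance="conservative",
)
print(prompt)
```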

—

Final Thoughts

These prompts work because they give AI the context it needs to be genuinely helpful.

But they require you to think through what you actually need before you ask. That upfront thinking? It’s not extra work; it’s the strategic thinking you should be doing anyway.

The data scientists who consistently deliver faster results aren’t necessarily smarter or more technically skilled. They’re just better at communicating their needs, whether to AI systems, stakeholders, or their own future selves looking at code six months later.

With Livedocs’ collaborative features and powerful analysis capabilities, you’ve got the platform to support sophisticated workflows. Now you’ve got the prompts to match.

Try Livedocs yourself and build your first live notebook in minutes.

—

- 💬 If you have questions or feedback, email us directly at a[at]livedocs[dot]com

- 📣 Take Livedocs for a spin over at livedocs.com/start. Livedocs has a great free plan, with $10 per month of LLM usage on every plan

- 🤝 Say hello to the team on X and LinkedIn

Stay tuned for the next tutorial!
