Case Study: GPU Market Analysis

Gone are the days when you could just grab whatever NVIDIA card sat in the middle of the price range and call it a day. Now we’re looking at AI workloads that demand different specs than gaming rigs, content creators who need memory bandwidth that would’ve seemed absurd five years ago, and price points that make you do a double-take.

That’s exactly where this analysis came from. Not some theoretical exercise, but a real need to cut through the noise and figure out: what GPU should different types of users actually buy?

This case study is based on our analysis in Livedocs; you can access the notebook here.

Data Sources: Tom’s Hardware, PassMark, TechPowerUp, AMD, NVIDIA (November 2025)

—

The Problem: Too Many Options.

A mid-sized design studio reaches out: they’re expanding their team, need to spec out five new workstations, and are staring at a GPU market that’s frankly overwhelming. RTX 5090s are grabbing headlines with their 32GB of VRAM. AMD’s RX 7000 series is offering compelling value.

The challenge wasn’t just about specs. Anyone can read a spec sheet.

The real question was: which specs actually matter for your specific use case, and how do you balance performance against cost without either overspending on capabilities you’ll never use or handicapping your workflow with inadequate hardware?

—

Building a Framework That Actually Makes Sense

Identifying the User Profiles

First step? We needed to understand who’s actually buying these things and what they’re doing with them. Not marketing personas based on assumptions, but real usage patterns. We ended up with four core profiles:

AI Developers & Machine Learning Engineers

These folks live in Jupyter notebooks and PyTorch environments. They’re training models, fine-tuning transformers, running inference at scale. Their bottleneck? Almost always VRAM and tensor performance. When your training job crashes because you ran out of memory at 87% completion, you remember that pain.

4K Gamers

The enthusiasts who want their games to look spectacular and run smoothly. They’re pushing pixels at 3840×2160, often with ray tracing enabled, and they’re not interested in compromises. For them, raster performance and frame consistency matter more than anything else.

1440p Gamers

This is actually the sweet spot for most gaming. QHD resolution, high refresh rates (we’re talking 144Hz+), and they want excellent value. They’re savvy shoppers who know that diminishing returns hit hard past a certain price point.

3D Artists & Content Creators

The Blender wizards, Maya professionals, video editors working in DaVinci Resolve. They need viewport responsiveness, fast render times, and enough VRAM to handle complex scenes without constantly optimizing poly counts.

Defining What Actually Matters

Each profile cares about different things, right? An ML engineer doesn’t really care about ray tracing performance. A gamer doesn’t need 32GB of VRAM. So we mapped the critical metrics for each:

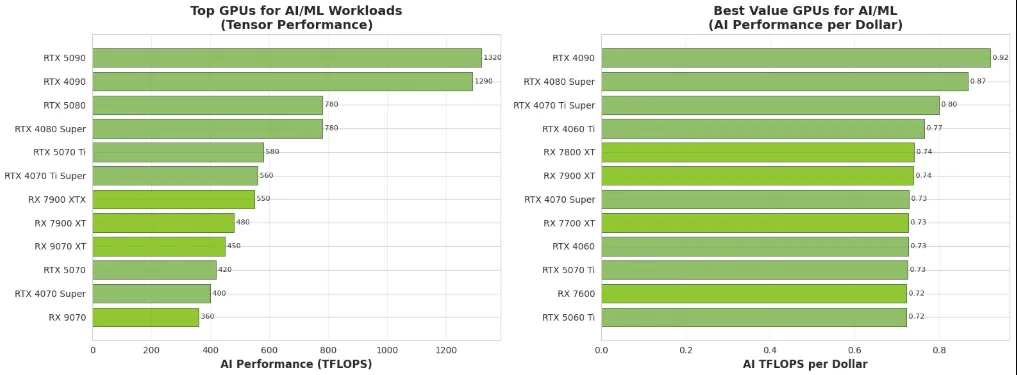

For AI work, we focused on tensor FLOPS (that’s floating-point operations per second, specifically for matrix math), VRAM capacity (because running out mid-training is a workflow killer), and memory bandwidth (how fast data moves to and from that VRAM).

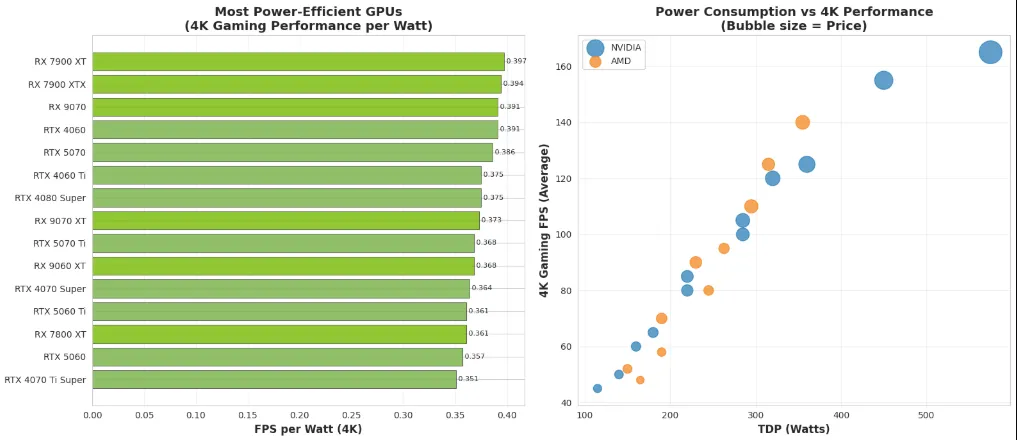

Gaming metrics centered on actual frame rates at target resolutions, ray tracing scores (normalized to 100 for the best card), and VRAM adequacy for modern titles that are increasingly demanding on texture memory.
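Normalizing to 100 for the best card is simple enough to sketch. The snippet below is a minimal illustration, not the notebook’s actual code: the card names and raw scores are hypothetical placeholders, and `normalize_to_best` is a helper name we made up for this example.

```python
def normalize_to_best(scores: dict[str, float]) -> dict[str, float]:
    """Scale scores so the highest-scoring card lands on exactly 100."""
    best = max(scores.values())
    if best <= 0:
        raise ValueError("at least one score must be positive")
    return {card: round(100 * value / best, 1) for card, value in scores.items()}


# Hypothetical raw ray tracing scores, for illustration only (not from the dataset).
raw_rt_scores = {"card_a": 250.0, "card_b": 200.0, "card_c": 125.0}

normalized = normalize_to_best(raw_rt_scores)
print(normalized)
```

The point of the scaling is that a score of 80 reads directly as "80% of the best card," whatever units the underlying benchmark used.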

Content creation needed that high VRAM again, plus viewport performance (because nobody wants to work in a sluggish interface), and memory bandwidth for moving large assets around.
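One way to make that mapping concrete is a plain lookup table from profile to the metrics it cares about. This is a sketch under our own assumptions: the field names (`tensor_tflops`, `vram_gb`, and so on) and the `relevant_specs` helper are illustrative, not the notebook’s schema. The RTX 5090 figures are the ones quoted in this article.

```python
# Profile -> metrics that matter, as described above. Field names are assumptions.
PROFILE_METRICS: dict[str, list[str]] = {
    "ai_developer": ["tensor_tflops", "vram_gb", "memory_bandwidth_gbs"],
    "gamer_4k": ["fps_4k", "ray_tracing_score", "vram_gb"],
    "gamer_1440p": ["fps_1440p", "ray_tracing_score", "vram_gb"],
    "content_creator": ["vram_gb", "viewport_score", "memory_bandwidth_gbs"],
}


def relevant_specs(profile: str, card: dict[str, float]) -> dict[str, float]:
    """Keep only the spec fields this profile cares about (skipping missing ones)."""
    return {m: card[m] for m in PROFILE_METRICS[profile] if m in card}


# RTX 5090 figures quoted in this article.
rtx_5090 = {
    "tensor_tflops": 1320,
    "vram_gb": 32,
    "memory_bandwidth_gbs": 1792,
    "fps_4k": 165,
    "fps_1440p": 240,
}

print(relevant_specs("ai_developer", rtx_5090))
print(relevant_specs("gamer_4k", rtx_5090))
```

Keeping the mapping as data rather than as branching logic makes it easy to add a fifth profile later without touching the comparison code.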

The Analysis: What the Data Actually Revealed

Let me walk you through what we found, because some of this was surprising even to us.

The AI Developer Landscape

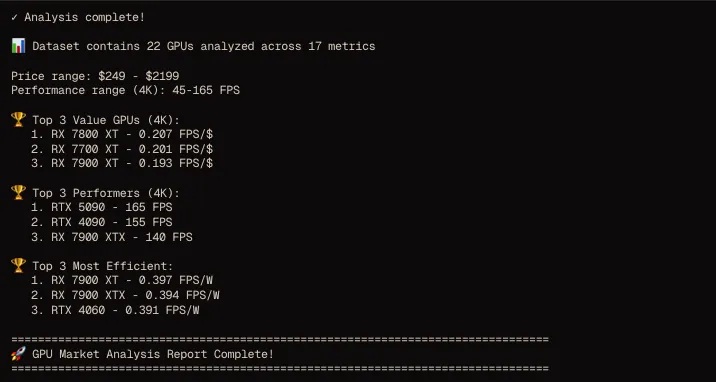

The RTX 5090 dominates the premium tier at $2,199, and honestly? For serious ML work, it’s kind of a no-brainer—if you can afford it. That 32GB of VRAM isn’t marketing fluff; it’s the difference between training larger models locally versus constantly renting cloud GPU time. At 1,320 TFLOPS, you’re looking at exceptional tensor performance.

But here’s what caught our attention: the RTX 4090 at $1,399 delivers 1,290 TFLOPS—that’s 0.92 TFLOPS per dollar compared to the 5090’s 0.60. You’re saving $800 and losing what, maybe 10-15% real-world performance for most tasks? For a small AI startup or a researcher working within grant budgets, that’s a compelling argument.
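If you want to check the TFLOPS-per-dollar math yourself, here’s a minimal sketch using only the prices and tensor figures quoted above. The `Card` class is our own illustrative structure, not something from the notebook.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    price_usd: float
    tensor_tflops: float

    @property
    def tflops_per_dollar(self) -> float:
        """Peak tensor throughput bought per dollar of purchase price."""
        return self.tensor_tflops / self.price_usd


# Prices and TFLOPS as quoted in this article.
rtx_5090 = Card("RTX 5090", 2199, 1320)
rtx_4090 = Card("RTX 4090", 1399, 1290)

for card in (rtx_5090, rtx_4090):
    print(f"{card.name}: {card.tflops_per_dollar:.2f} TFLOPS per dollar")

price_gap = rtx_5090.price_usd - rtx_4090.price_usd
print(f"Price gap: ${price_gap:,.0f}")
```

Keep in mind this divides a peak spec by a price, so it says nothing about VRAM headroom or real-world training speed; those still need to be weighed separately.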

The budget pick, the RTX 5070 at $1,099, represents entry into serious ML work. Yeah, 12GB VRAM is limiting for large language models, but for learning, experimentation, and inference workloads, it’s legitimate. Better to have a capable GPU and start training than to wait indefinitely saving for the premium option.

Gaming, Where AMD Makes Its Case

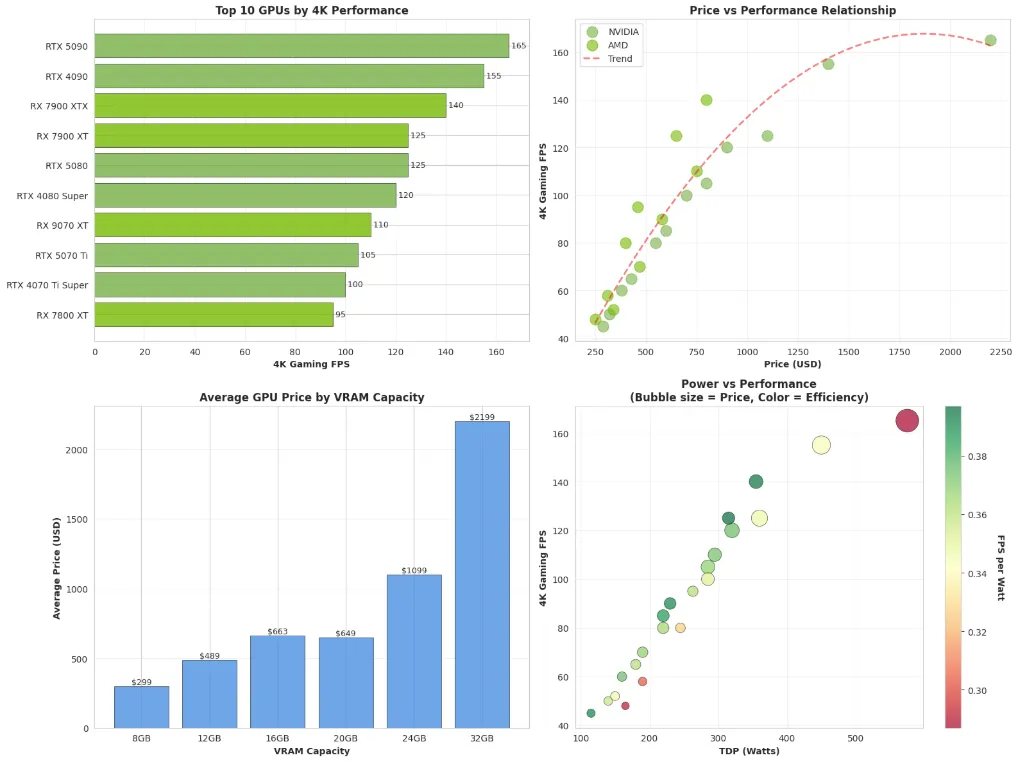

This is where the analysis got really interesting. The 4K gaming premium tier goes to the RTX 5090 at 165 FPS average, which is frankly overkill for most scenarios. But when you’re pushing a high-refresh 4K display or you absolutely want every graphical bell and whistle enabled, it delivers.

The value proposition, though? That belongs to AMD’s RX 7800 XT at $459. Hear me out: 95 FPS at 4K, 16GB of VRAM, and you’re paying about a fifth of what the 5090 costs. The value metric is 0.207 FPS per dollar. For context, that’s nearly three times the FPS per dollar of the premium option.

And you know what’s wild? The budget recommendation is also the RX 7800 XT. Same card. Because at $459, it legitimately serves both roles. Use upscaling tech like FSR (DLSS is limited to NVIDIA cards), maybe dial back a few settings from Ultra to High, and you’ve got smooth 4K gaming for less than the cost of a decent gaming monitor.

The 1440p segment tells a similar story. The RX 7800 XT hits 150 FPS at this resolution with a value metric of 0.327 FPS per dollar. The premium option (RTX 5090) gives you 240 FPS, which is amazing for competitive esports but represents significant overspending for most users.
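The gaming value metric is just average FPS divided by price. Here’s a small sketch that reproduces the numbers above from the figures quoted in this article; the dictionary layout and the `fps_per_dollar` helper are our own illustration.

```python
# Prices and average FPS as quoted in this article.
PRICES = {"RTX 5090": 2199, "RX 7800 XT": 459}
AVG_FPS = {
    "4K": {"RTX 5090": 165, "RX 7800 XT": 95},
    "1440p": {"RTX 5090": 240, "RX 7800 XT": 150},
}


def fps_per_dollar(card: str, resolution: str) -> float:
    """Average frames per second bought per dollar at a given resolution."""
    return AVG_FPS[resolution][card] / PRICES[card]


for resolution in AVG_FPS:
    for card in PRICES:
        value = fps_per_dollar(card, resolution)
        print(f"{resolution:>5} | {card:<10} | {value:.3f} FPS per dollar")

# How much more FPS per dollar the value pick delivers at 4K.
value_ratio_4k = fps_per_dollar("RX 7800 XT", "4K") / fps_per_dollar("RTX 5090", "4K")
print(f"4K value ratio: {value_ratio_4k:.2f}x")
```

A ratio like this only tells you about efficiency. Whether 95 FPS is enough for your display and your games is a separate question the metric can’t answer.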

Content Creation: The VRAM Wars

3D artists and content creators face a different calculus. This is where VRAM capacity becomes non-negotiable. Try working in Blender on a complex architectural scene with 8GB of VRAM and you’ll be optimizing and pruning constantly just to keep the viewport responsive.

The RTX 5090’s 32GB makes sense here. When you’re working on client projects with tight deadlines, viewport lag and render crashes cost you real money. That 1,792 GB/s memory bandwidth means your scenes load faster, your edits feel responsive, and you’re not sitting around waiting for the hardware to catch up with your creativity.

But again, the RTX 4090 at $1,399 with 24GB tells a different story. For freelancers and small studios, that’s still professional-grade capability at an $800 discount. Most projects don’t actually push past 24GB until you’re getting into feature film VFX territory.

The budget option here, the RX 7900 XT at $649 with 20GB, represents entry-level professional work. It’s adequate for learning industry tools, building portfolios, and handling moderate complexity projects. But you’ll feel the constraints faster than in other use cases.
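Since VRAM is the hard constraint for this profile, one reasonable way to shortlist is "cheapest card that clears my VRAM floor." The sketch below uses the three cards and prices from this section; the `cheapest_with_vram` helper and the example thresholds are assumptions for illustration, and it deliberately ignores bandwidth and viewport performance.

```python
from typing import Optional

# Cards, prices, and VRAM as quoted in this section.
CARDS = [
    {"name": "RTX 5090", "price_usd": 2199, "vram_gb": 32},
    {"name": "RTX 4090", "price_usd": 1399, "vram_gb": 24},
    {"name": "RX 7900 XT", "price_usd": 649, "vram_gb": 20},
]


def cheapest_with_vram(required_gb: int) -> Optional[dict]:
    """Return the lowest-priced card with at least `required_gb` of VRAM, or None."""
    candidates = [card for card in CARDS if card["vram_gb"] >= required_gb]
    if not candidates:
        return None
    return min(candidates, key=lambda card: card["price_usd"])


# Example VRAM floors (hypothetical scene sizes, for illustration only).
for needed in (16, 22, 28, 48):
    pick = cheapest_with_vram(needed)
    label = pick["name"] if pick else "nothing on this list"
    print(f"Need {needed}GB -> {label}")
```

Treating VRAM as a filter rather than a score matches how it behaves in practice: a scene either fits or it doesn’t.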

The AI Startup That Saved $12,000

Another case: a small AI research company spinning out of a university. They had funding for hardware but needed to maximize their runway. Initial plan? Four RTX 5090s for the team.

The analysis made them reconsider. They bought two 5090s for the lead researchers working on large model training, and four 4090s for the rest of the team doing fine-tuning and experimentation. More GPUs overall (six instead of four), broader team capability, and $3,600 in savings.

Six months later, they told us this was the right call. The junior researchers rarely maxed out the 4090s, and having more GPUs available meant less time queuing for resources.

The Methodology’s Broader Implications

Audience Segmentation

Most tech purchasing advice tries to be universal. “Best GPU of 2025!” But best for whom? Best at what? By starting with distinct user profiles and their actual workflows, we created recommendations that feel personal even though they’re data-driven.

This matters for business decision-makers especially. When you’re making bulk purchases or setting hardware standards for a team, understanding the usage patterns helps you avoid both under-provisioning (which kills productivity) and over-provisioning (which wastes budget).

The Value Tier System

The three-tier approach (Premium/Value/Budget) acknowledges something important: different situations warrant different spending strategies. A freelancer bootstrapping their business has different constraints than an established studio. A hobbyist learning machine learning has different needs than a research scientist.

By explicitly calculating performance per dollar and showing the actual performance deltas, buyers can make informed tradeoffs. Maybe you don’t need the absolute best—maybe you need “80% of the performance at 50% of the cost” and that’s perfectly rational.
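To see what a "share of the performance at a share of the cost" comparison looks like with this article’s numbers, here’s a rough sketch. It compares each value pick against the RTX 5090 on a single headline metric (peak tensor TFLOPS or average 4K FPS), which is our simplification; real-world gaps can be larger than the paper spec suggests.

```python
# (label, card, card_perf, card_price, premium_perf, premium_price)
# All figures are the ones quoted in this article; the premium card is the RTX 5090.
comparisons = [
    ("AI (tensor TFLOPS)", "RTX 4090", 1290, 1399, 1320, 2199),
    ("4K gaming (avg FPS)", "RX 7800 XT", 95, 459, 165, 2199),
]

results: dict[str, tuple[float, float]] = {}
for label, card, perf, price, premium_perf, premium_price in comparisons:
    perf_share = perf / premium_perf    # fraction of the premium card's headline metric
    cost_share = price / premium_price  # fraction of the premium card's price
    results[card] = (perf_share, cost_share)
    print(
        f"{label}: {card} gives {perf_share:.0%} of RTX 5090 performance "
        f"at {cost_share:.0%} of its price"
    )
```

Putting both shares side by side is what makes the tradeoff explicit; either number on its own is easy to misread.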

What This Means for Future Hardware Decisions

The GPU market in 2025 is frankly more complex than it’s ever been. We’ve got AI workloads driving demand for massive VRAM. Gaming pushing 4K and ray tracing mainstream. Content creation tools becoming more demanding. And pricing that reflects both increased capability and market dynamics.

This analysis framework provides a template for navigating this complexity, not just for GPUs, but for any technical purchasing decision. Start with understanding your actual use case. Identify the metrics that matter for your workflow. Calculate real value, not just raw performance. Consider the full spectrum from budget to premium and honestly assess where on that spectrum your needs fall.

The Power Consumption Question

One thing we noted but didn’t stress enough initially: power draw matters. The RTX 5090 pulls 575W under load. That’s not trivial. NVIDIA recommends a 1000W PSU for it, you need robust cooling, and you’ll see it on your power bill if you’re running intensive workloads regularly.

The RX 7800 XT, by contrast, draws 263W. For office environments with multiple workstations, that delta adds up. It affects cooling requirements, power infrastructure, and operating costs. Sometimes the “budget” option saves you money in multiple ways.
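Here’s a back-of-the-envelope sketch of what that delta could mean on a power bill. The wattages are the ones quoted above; the hours per day, working days, electricity rate, and the five-workstation fleet size are assumptions we picked for illustration, and running at full load all day makes this an upper bound.

```python
def annual_energy_cost(
    watts: float, hours_per_day: float, usd_per_kwh: float, days_per_year: int = 250
) -> float:
    """Yearly electricity cost for a constant draw of `watts`."""
    kwh = watts / 1000 * hours_per_day * days_per_year
    return kwh * usd_per_kwh


RTX_5090_WATTS = 575    # quoted above
RX_7800_XT_WATTS = 263  # quoted above

# Assumptions for illustration only: adjust to your own usage and tariff.
HOURS_PER_DAY = 8
USD_PER_KWH = 0.15
WORKSTATIONS = 5

delta_watts = RTX_5090_WATTS - RX_7800_XT_WATTS
per_seat = annual_energy_cost(delta_watts, HOURS_PER_DAY, USD_PER_KWH)
fleet = per_seat * WORKSTATIONS

print(f"Extra draw per card: {delta_watts}W")
print(f"Extra cost per seat per year: ${per_seat:.2f}")
print(f"Extra cost across {WORKSTATIONS} seats: ${fleet:.2f}")
```

This only covers electricity at the wall. Extra cooling load in a shared office would add to it.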

Future-Proofing vs. Right-Sizing

There’s this temptation to “future-proof” hardware purchases: buy more than you need now on the theory that requirements will grow. Sometimes that makes sense. For content creators whose project complexity typically increases over time, that extra VRAM headroom can extend a GPU’s useful life.

But honestly? For many use cases, buying appropriate hardware now and planning for a replacement cycle makes more financial sense than overshooting requirements by 50%. Technology moves fast. That premium GPU you bought for future-proofing might be outclassed by next year’s mid-range options anyway.

Key Takeaways From The Analysis

Here’s what this analysis demonstrates:

Segment your users carefully.

Not everyone needs the same hardware. A mixed approach (premium GPUs where they matter most, value options where they’re sufficient) often optimizes both performance and budget better than standardizing on one tier.

Value isn’t about being cheap.

The “best value” recommendations often represent the sweet spot where performance is still excellent but you’re not paying exponentially more for diminishing returns. An 85% solution at 60% of the cost is often the smart play.

Consider total cost of ownership.

Purchase price, power consumption, cooling requirements, and realistic replacement cycles all factor into actual costs. That $459 GPU might cost you another $100 in PSU upgrades; factor it in.
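A total-cost-of-ownership estimate can be as simple as purchase price plus one-off extras plus energy over the replacement cycle. The sketch below uses the prices and wattages quoted in this article and the $100 PSU upgrade mentioned above; the three-year cycle, 2,000 load hours per year, and $0.15/kWh rate are assumptions for illustration, and cooling is left out.

```python
def total_cost_of_ownership(
    price_usd: float,
    extras_usd: float,
    load_watts: float,
    years: float,
    load_hours_per_year: float,
    usd_per_kwh: float,
) -> float:
    """Purchase price + one-off extras + electricity over the ownership period."""
    energy_kwh = load_watts / 1000 * load_hours_per_year * years
    return price_usd + extras_usd + energy_kwh * usd_per_kwh


# Assumptions for illustration only.
YEARS = 3
LOAD_HOURS_PER_YEAR = 2000
USD_PER_KWH = 0.15

# RX 7800 XT: $459 card, $100 PSU upgrade, 263W under load.
tco_7800xt = total_cost_of_ownership(459, 100, 263, YEARS, LOAD_HOURS_PER_YEAR, USD_PER_KWH)
# RTX 5090: $2,199 card, 575W under load; any PSU or cooling upgrade is not included here.
tco_5090 = total_cost_of_ownership(2199, 0, 575, YEARS, LOAD_HOURS_PER_YEAR, USD_PER_KWH)

print(f"RX 7800 XT, {YEARS}-year TCO: ${tco_7800xt:,.2f}")
print(f"RTX 5090, {YEARS}-year TCO: ${tco_5090:,.2f}")
```

Swap in your own tariff, hours, and upgrade costs; the structure matters more than our placeholder numbers.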

Real-world performance beats theoretical specs.

Marketing materials will throw impressive numbers at you. Ignore them. Look for actual performance benchmarks in workflows similar to yours. FPS in representative games. Training time for models similar to what you’ll run. Render times in your actual software.

Budget constraints are legitimate.

There’s sometimes this attitude that if you can’t afford premium hardware, you’re not serious. That’s nonsense. Many professionals do excellent work on mid-range hardware. The budget recommendations in this analysis aren’t compromises; they’re legitimate tools for getting work done within realistic constraints.

Final Thoughts

This GPU market analysis for 2025 represents more than just a buying guide. It’s a methodology for making technical purchasing decisions in an increasingly complex market. By starting with user profiles, identifying what actually matters for each use case, and providing tiered recommendations based on both performance and value, it helps buyers cut through the noise.

The specific GPU recommendations will evolve—new cards will launch, prices will shift, performance will improve. But the approach? That stays relevant. And in a tech landscape where hardware decisions can represent significant investments, having a structured way to evaluate options is worth more than any specific product recommendation.

To make your own analysis like this? Use Livedocs.

- 8x speed response

- Ask agent to find datasets for you

- Set system rules for agent

- Collaborate

- And more

Get started with Livedocs and build your first live notebook in minutes.

- 💬 If you have questions or feedback, please email directly at a[at]livedocs[dot]com

- 📣 Take Livedocs for a spin over at livedocs.com/start. Livedocs has a great free plan, with $10 per month of LLM usage on every plan

- 🤝 Say hello to the team on X and LinkedIn

Stay tuned for the next tutorial!
