From Data to Stakeholder Buy-In

Here’s the thing about being a data scientist or ML engineer: you can build the most elegant model in the world, but it’s wasted if your stakeholders don’t understand what it means.

Your business executives aren’t trying to understand F1 scores and AUC. They just want to know: “Will this make us money?”

—

Why Traditional ML Metrics Fall Flat with Business Folks

Telling a retail executive that your recommendation engine has an 87% accuracy rate means nothing if you can’t connect that to increased basket sizes or customer lifetime value.

We spend years learning about precision, recall, and log loss, then wonder why our CFO’s eyes glaze over during presentations. The problem isn’t the metrics themselves; they’re crucial for building good models. The disconnect happens when we forget to translate technical performance into business impact.

Most stakeholders care about three things, and three things only: revenue growth, cost reduction, and risk mitigation. Everything else is background noise. And your job is to build a bridge between your model’s performance and these core concerns.

—

The Metrics That Actually Matter to Stakeholders

When you’re presenting to business leaders, here are the metrics that’ll actually resonate:

Revenue Impact Metrics

- Customer Lifetime Value (CLV) lift: How much more is each customer worth after your model intervenes?

- Conversion rate improvement: If your model increases conversions by 2%, what does that mean in actual dollars?

- Average order value (AOV) increase: Even small percentage gains here can translate to massive revenue bumps

- Churn reduction value: Calculate the retained revenue from customers who didn’t leave because your model caught them in time
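To make the conversion bullet concrete, here’s a minimal sketch of the arithmetic. It assumes the 2% is a relative lift on the baseline rate (not two percentage points), and the traffic, baseline rate, and order value are made-up numbers rather than figures from a real business.

```python
def conversion_lift_revenue(visitors, baseline_rate, relative_lift, avg_order_value):
    """Extra revenue from a relative improvement in conversion rate."""
    baseline_orders = visitors * baseline_rate
    new_orders = baseline_orders * (1 + relative_lift)
    return (new_orders - baseline_orders) * avg_order_value


# Hypothetical inputs: 500,000 monthly visitors, 3% baseline conversion,
# a 2% relative lift, and a $60 average order value.
extra_revenue = conversion_lift_revenue(
    visitors=500_000,
    baseline_rate=0.03,
    relative_lift=0.02,
    avg_order_value=60.0,
)
print(f"Extra monthly revenue: ${extra_revenue:,.0f}")
```

Swap in your own traffic and order values; the point is that the stakeholder sees a dollar figure, not a percentage.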

Operational Efficiency Metrics

- Time saved per transaction or process: Minutes matter when you’re talking about thousands of daily operations.

- Cost per prediction vs. manual cost: Show the math on automation savings

- Error reduction rates translated to dollars: Each mistake costs money, so quantify the savings from fewer errors

- Resource reallocation value: If your model frees up 3 employees, what can those employees now focus on?
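“Show the math” on automation savings can be as short as the sketch below. Every number in it (volume, manual cost, per-prediction cost, fixed platform cost) is a hypothetical placeholder, and the function names are mine, not from any library.

```python
def monthly_automation_savings(volume, manual_cost, model_cost, fixed_cost=0.0):
    """Net savings when a model replaces a manual step for `volume` items."""
    return volume * (manual_cost - model_cost) - fixed_cost


def break_even_volume(manual_cost, model_cost, fixed_cost):
    """Number of items per month at which automation pays for itself."""
    return fixed_cost / (manual_cost - model_cost)


# Hypothetical: 20,000 items a month, $1.50 to handle one manually,
# $0.02 per model prediction, $5,000 a month in fixed infrastructure.
savings = monthly_automation_savings(20_000, 1.50, 0.02, fixed_cost=5_000)
threshold = break_even_volume(1.50, 0.02, fixed_cost=5_000)

print(f"Net monthly savings: ${savings:,.0f}")
print(f"Break-even volume:   {threshold:,.0f} items per month")
```

The break-even number is often the more persuasive one, because it tells a stakeholder how much volume the model needs before it earns its keep.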

Risk and Compliance Metrics

- False positive/negative costs: A false positive in fraud detection might annoy a customer; a false negative could cost millions

- Regulatory compliance improvements: Quantify the cost of non-compliance that your model helps avoid

- Brand reputation protection value: Some things are harder to quantify, but try anyway.
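Asymmetric error costs also change where you set your decision threshold. Here’s a rough sketch that sweeps candidate thresholds and picks the one with the lowest total dollar cost. The scores, labels, and the two cost figures are toy values chosen for illustration only.

```python
def total_error_cost(scores, labels, threshold, cost_fp, cost_fn):
    """Dollar cost of the mistakes made when flagging scores >= threshold."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return fp * cost_fp + fn * cost_fn


def cheapest_threshold(scores, labels, cost_fp, cost_fn):
    """Try each observed score as a threshold and keep the cheapest one."""
    candidates = sorted(set(scores))
    return min(
        candidates,
        key=lambda t: total_error_cost(scores, labels, t, cost_fp, cost_fn),
    )


# Toy fraud scores and true labels (1 = fraud). Hypothetical costs:
# a false alarm costs $5 in support time, a missed fraud costs $100.
scores = [0.1, 0.4, 0.35, 0.8, 0.65, 0.9]
labels = [0, 0, 1, 1, 0, 1]

best_threshold = cheapest_threshold(scores, labels, cost_fp=5, cost_fn=100)
best_cost = total_error_cost(scores, labels, best_threshold, cost_fp=5, cost_fn=100)
print(f"Cheapest threshold: {best_threshold} (total error cost ${best_cost})")
```

When misses are far more expensive than false alarms, the cheapest threshold sits lower than the one that maximizes accuracy, and that trade-off is worth showing explicitly.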

The trick is to do the homework up front. Before building anything, sit down with stakeholders and understand their KPIs. What keeps them up at night? What metrics do they report to their bosses? Then design your evaluation framework to speak directly to those concerns.

—

Building Custom Metrics That Bridge Both Worlds

Here’s how you might structure a custom business-oriented metric:

Let’s say you’re building a predictive maintenance model for a manufacturing company. Sure, you could report your model’s RMSE for predicting equipment failure times. But here’s what stakeholders really care about: unplanned downtime costs them $100,000 per hour. Your model reduces unplanned downtime by 30%. That’s the metric that matters.
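Turning that into a headline number takes one more input: how many hours of unplanned downtime the plant has today. The sketch below uses the $100,000 per hour and 30% figures from the example, while the 120 baseline hours per year is purely an assumption for illustration.

```python
COST_PER_DOWNTIME_HOUR = 100_000  # from the example above
DOWNTIME_REDUCTION = 0.30         # from the example above
BASELINE_HOURS_PER_YEAR = 120     # hypothetical, replace with the plant's own figure


def annual_downtime_savings(baseline_hours, reduction, cost_per_hour):
    """Dollar value of the unplanned downtime the model helps avoid."""
    avoided_hours = baseline_hours * reduction
    return avoided_hours * cost_per_hour


savings = annual_downtime_savings(
    BASELINE_HOURS_PER_YEAR, DOWNTIME_REDUCTION, COST_PER_DOWNTIME_HOUR
)
print(f"Avoided downtime cost: ${savings:,.0f} per year")
```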

For a classification model, the same idea can be written as a single formula:

Business Value Score = (True Positives × Revenue per Correct Prediction) - (False Positives × Cost per False Alarm) - (False Negatives × Cost per Miss)

See what happened there? We took standard classification outcomes and weighted them by actual business costs. Suddenly your confusion matrix becomes a profit matrix. Your stakeholders get it immediately because you’re speaking their language.
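In code, the Business Value Score is only a few lines. This is a minimal sketch of the formula above; the class name, field names, counts, and dollar values are all hypothetical and should come from your own stakeholders.

```python
from dataclasses import dataclass


@dataclass
class OutcomeValues:
    """Dollar value attached to each kind of prediction outcome."""
    revenue_per_tp: float  # revenue per correct positive prediction
    cost_per_fp: float     # cost per false alarm
    cost_per_fn: float     # cost per miss


def business_value_score(tp, fp, fn, values):
    """Weight confusion-matrix counts by their business value."""
    return (
        tp * values.revenue_per_tp
        - fp * values.cost_per_fp
        - fn * values.cost_per_fn
    )


# Hypothetical counts and dollar values for one month of predictions.
values = OutcomeValues(revenue_per_tp=500, cost_per_fp=50, cost_per_fn=2_000)
score = business_value_score(tp=120, fp=40, fn=15, values=values)
print(f"Business Value Score: ${score:,.0f}")
```

Because the costs live in one small object, you can rerun the same confusion matrix under different cost assumptions when finance revises its numbers.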

Another approach I’ve seen work well is creating tiered metrics: show the technical metrics to satisfy the data-literate folks, but lead with the business metrics. Your slide deck might look like:

- Impact Summary: “This model will save $2.3M annually”

- How We Got There: “By improving prediction accuracy from 72% to 89%…”

- Technical Deep-Dive (in appendix): “Model architecture, hyperparameters, and performance curves”

Most executives will stop at slide 1. The technical team can geek out on slide 3. Everyone’s happy.

—

Where Modern Platforms Like Livedocs Come Into Play

Here’s where things get practical, and honestly, a bit easier. Tracking and reporting these metrics used to mean building entire dashboards from scratch, writing SQL queries, and manually updating reports. It was exhausting.

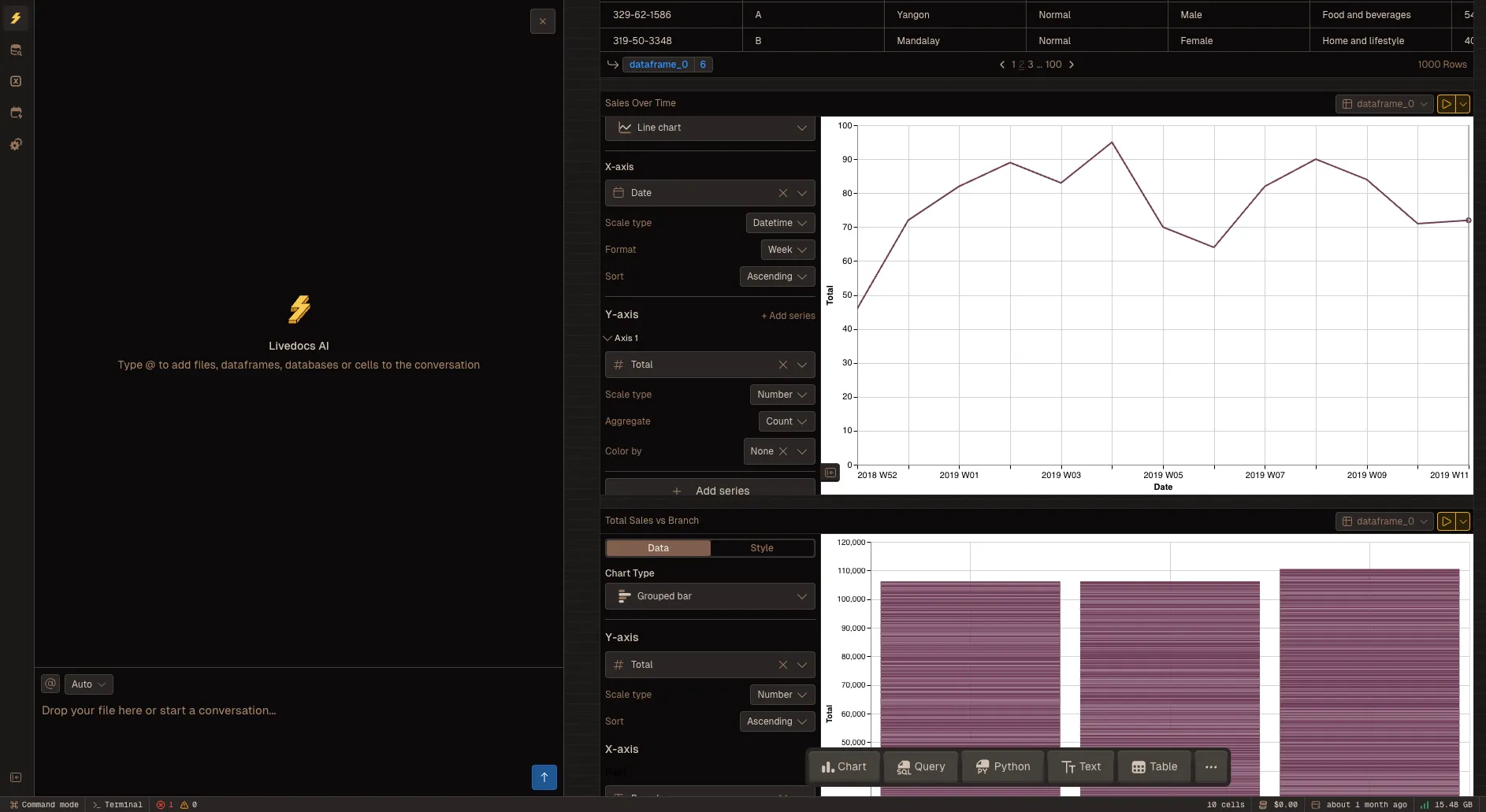

Tools like Livedocs are changing the game by combining notebook-style data work with simple app-building capabilities, which is perfect for teams that need to bridge the gap between technical analysis and stakeholder communication. Think about these use cases:

Real-Time Model Performance Dashboards

Imagine you’ve deployed a pricing optimization model. Your stakeholders don’t want to wait for weekly reports; they want to see how it’s performing right now. With platforms that support exploratory data analysis and trend visualization without complex setup, you can create living documents that pull fresh data automatically.

Your VP of Sales can check this morning’s model performance over coffee, without bugging you for an update.

Instead of teams resorting to VBA scripts or constantly asking data teams for simple metrics, you build it once and it updates itself. That pricing model dashboard shows today’s revenue lift, yesterday’s prediction accuracy, and this week’s customer impact, all refreshed automatically.

Metric Translation Hubs

Here’s something I’ve started doing: creating what I call “translation documents” that sit between raw model outputs and business reports. Using Python and SQL together in a collaborative environment lets you calculate technical metrics in one section, then immediately transform them into business metrics in the next section, all in the same document.

For example, you might run a SQL query to pull your model’s confusion matrix, then use Python to calculate the dollar impact of each cell, then visualize it in a chart your CFO actually understands. No context-switching between tools, no copy-pasting numbers into spreadsheets. Just a clean, automated flow from technical metrics to business insights.
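Here’s a rough sketch of that SQL-then-Python flow. It uses Python’s built-in sqlite3 module with an in-memory table as a stand-in for your real prediction log, so the table name, column names, row counts, and dollar values are all assumptions for illustration, not any platform’s actual API.

```python
import sqlite3

# Stand-in for a real prediction log: (actual label, predicted label).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE predictions (actual INTEGER, predicted INTEGER)")
rows = [(1, 1)] * 30 + [(0, 1)] * 10 + [(1, 0)] * 5 + [(0, 0)] * 55
conn.executemany("INSERT INTO predictions VALUES (?, ?)", rows)

# Step 1 (SQL): pull the confusion matrix as counts per cell.
query = """
    SELECT actual, predicted, COUNT(*)
    FROM predictions
    GROUP BY actual, predicted
"""
counts = {(actual, predicted): n for actual, predicted, n in conn.execute(query)}

# Step 2 (Python): attach a hypothetical dollar value to each cell.
dollar_value = {
    (1, 1): 200.0,   # true positive: value captured
    (0, 1): -25.0,   # false positive: cost of a false alarm
    (1, 0): -400.0,  # false negative: cost of a miss
    (0, 0): 0.0,     # true negative: no direct impact
}
impact = {cell: counts.get(cell, 0) * value for cell, value in dollar_value.items()}
total_impact = sum(impact.values())

# Step 3: print a small table (a chart would go here in a real document).
for (actual, predicted), dollars in impact.items():
    print(f"actual={actual} predicted={predicted}: ${dollars:>10,.2f}")
print(f"Net impact: ${total_impact:,.2f}")
conn.close()
```

The per-cell dollar figures are the part your CFO should sign off on; the rest is plumbing.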

Scenario Planning and “What-If” Analysis

Stakeholders love asking “what if” questions.

What if we improve precision by 5%? What if we deploy to a new market? What if we double our training data? Platforms built for scenario planning and risk assessment let you build interactive tools where stakeholders can adjust parameters and immediately see business impact.
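Underneath any interactive “what-if” tool is a function that maps a parameter to a dollar figure. Below is a minimal sketch for the precision question, assuming the number of alerts stays fixed and reading “5%” as five percentage points. The alert volume and dollar values are hypothetical.

```python
def alert_value(alerts, precision, value_per_hit, cost_per_false_alarm):
    """Net value of acting on `alerts` flags at a given precision."""
    true_hits = alerts * precision
    false_alarms = alerts * (1 - precision)
    return true_hits * value_per_hit - false_alarms * cost_per_false_alarm


# Hypothetical scenarios a stakeholder might want to compare.
scenarios = {
    "today": 0.80,
    "precision +5 points": 0.85,
    "precision +10 points": 0.90,
}
results = {
    name: alert_value(1_000, precision, value_per_hit=300.0, cost_per_false_alarm=40.0)
    for name, precision in scenarios.items()
}

baseline = results["today"]
for name, value in results.items():
    print(f"{name:<22} ${value:>10,.0f}  ({value - baseline:+,.0f} vs today)")
```

Wire the precision value to a slider or input field and stakeholders can answer their own “what if” questions.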

This is powerful because it shifts you from being the “report generator” to being the “insights enabler.” Your stakeholders can explore trade-offs themselves, which builds intuition and trust in your models.

Plus, you’re not stuck updating the same analysis 47 times with slightly different assumptions.

Collaborative Metric Development

Your stakeholders know the business context; you know what’s technically feasible. The intersection of that knowledge is where great metrics live.

When you can set up documents to automatically send to Slack channels or email daily, suddenly you’ve created a feedback loop. Stakeholders see the metrics, ask questions, and suggest refinements, all without formal meetings. You iterate on the metric definitions together until you land on something that’s both technically sound and business-relevant.

I’ve seen teams use this approach to co-create custom KPIs that neither side would’ve thought of alone. The data scientists understand model behavior; the business folks understand market dynamics. Together they build metrics that capture both dimensions.

Building Metrics Governance Into Your ML Workflow

One thing I’ve learned the hard way: establish your business metrics BEFORE you start building. I know, I know, everyone wants to start coding immediately. But spending a week defining success criteria with stakeholders saves months of frustration later. Create a metrics charter document. Include:

- Primary business metric (the one metric that matters most)

- Secondary business metrics (supporting indicators)

- Technical metrics you’ll track internally

- How business metrics map to technical metrics

- Thresholds for success, failure, and “needs improvement”

- Monitoring frequency and reporting cadence

When you need to prioritize debugging efforts, the charter tells you what matters most.
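A charter doesn’t have to live only in a slide deck. One option is to keep it next to the model code as a small data structure, so monitoring jobs can check results against it. The sketch below is one possible shape, with field names of my own choosing and entirely hypothetical thresholds.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MetricsCharter:
    """Agreed definition of success for one model (illustrative fields)."""
    primary_metric: str
    secondary_metrics: List[str]
    technical_metrics: List[str]
    business_to_technical: Dict[str, str]  # how business metrics map to technical ones
    success_threshold: float               # at or above this counts as success
    failure_threshold: float               # below this counts as failure
    reporting_cadence: str

    def status(self, observed: float) -> str:
        """Classify an observed value of the primary metric."""
        if observed >= self.success_threshold:
            return "success"
        if observed < self.failure_threshold:
            return "failure"
        return "needs improvement"


# Hypothetical charter for the predictive maintenance example.
charter = MetricsCharter(
    primary_metric="annual unplanned-downtime savings (USD)",
    secondary_metrics=["maintenance cost per machine", "false alarm rate"],
    technical_metrics=["RMSE of time-to-failure", "recall on failures"],
    business_to_technical={
        "annual unplanned-downtime savings (USD)": "recall on failures",
    },
    success_threshold=2_000_000,
    failure_threshold=500_000,
    reporting_cadence="weekly",
)
print(charter.status(1_200_000))
```

Writing the thresholds down this explicitly forces the “what counts as success?” conversation to happen before launch, not after.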

Final Thoughts

Start with the business problem. Define success in business terms. Build technical solutions that achieve those business goals. Measure and report in language everyone understands. Iterate based on real-world impact, not just validation metrics.

