Cybersecurity Must Evolve With Low-Code Tools

We’re living through a pretty remarkable shift in how people interact with data. Not long ago, if you wanted to build an AI-powered data analysis workflow, you needed a team of data scientists, access to expensive infrastructure, and weeks of development time.

Now? Platforms like Livedocs let analysts spin up AI agents that can query databases, generate visualizations, and deliver insights in minutes, all without writing complex code.

This democratization is genuinely exciting. I’ve watched security teams go from waiting weeks for custom threat analysis dashboards to building their own interactive data workflows in an afternoon.

The barrier between “I have a question about our data” and “here’s an AI agent analyzing that data” has essentially disappeared.

But here’s the thing. As someone who’s spent years in cybersecurity, I can’t help but think about what this shift means for how we protect these systems. When powerful data analysis capabilities become this accessible, security can’t be an afterthought. The good news? We have an opportunity to build security into AI workflows from day one, not bolt it on later when something goes wrong.

Why Collaborative AI Platforms Need a Different Security Approach

Traditional data analysis security was relatively straightforward. Your data lived in a database behind a firewall. Your analysts used approved BI tools. Everything was centralized and controlled. Security teams could monitor who accessed what, when, and how.

Platforms like Livedocs represent a fundamentally different model. They’re designed to be collaborative workspaces where teams can connect to multiple data sources, share notebooks with colleagues, build AI agents that execute code, and publish results for broader audiences. The whole point is flexibility and speed.

This doesn’t make them inherently less secure. It just means security needs to work differently. Instead of controlling access at a single database layer, you’re managing permissions across workspaces, documents, data sources, and AI agents. Instead of locking down who can run queries, you’re enabling people to experiment freely while ensuring they can’t accidentally expose sensitive data.

Think of it this way. In the old model, security was about building walls and controlling the gates. In collaborative AI platforms, security needs to be more distributed. It’s embedded in how data sources connect, how secrets are managed, how workspaces are organized, and how publishing controls work.

The Real Security Challenges (And What To Do About Them)

Let me walk you through the main security considerations that actually matter when you’re working with platforms like Livedocs. These aren’t theoretical concerns. They’re the real issues I see teams wrestling with as they adopt these tools.

Managing Data Access in a Collaborative Environment

Here’s a scenario that happens all the time. An analyst connects a PostgreSQL database to Livedocs to build a quick dashboard analyzing customer behavior. They create a notebook, add some Python code to process the data, generate visualizations, and share it with their team for feedback. Suddenly, that customer database is more accessible than anyone intended.

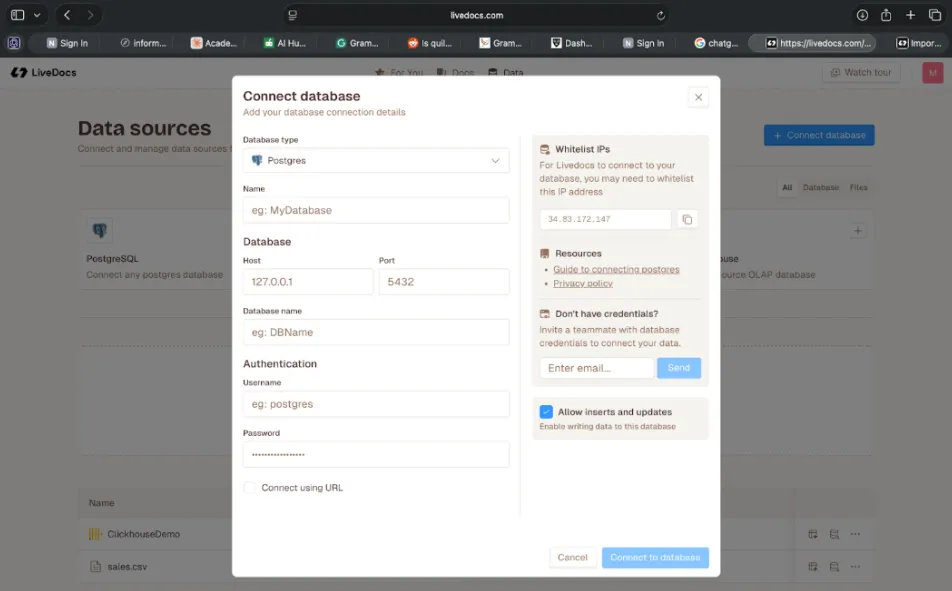

The risk isn’t malicious. It’s accidental exposure through collaboration. Good platforms handle this by treating data sources as first-class security boundaries. In Livedocs, when you connect a database or upload a file, that connection lives at the workspace level. You’re not just connecting your personal account to a database. You’re creating a managed data source that the workspace administrators can control.

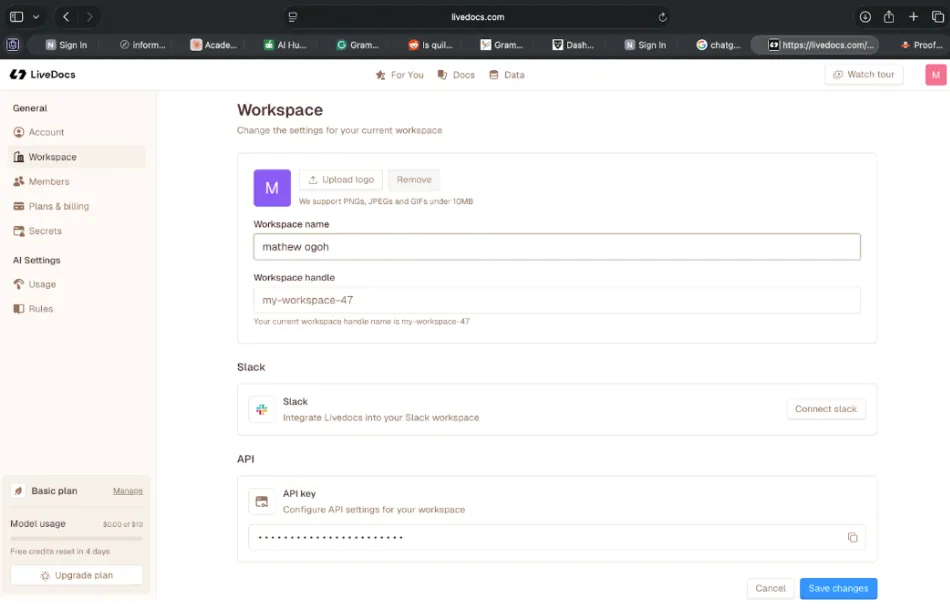

Figure 1: Livedocs’ centralized database connection interface ensures credentials are managed at the workspace level, not by individual users. This prevents credential sprawl and maintains consistent access control.

This is actually pretty clever from a security perspective. It means your junior analysts can use the data source in their notebooks without needing database credentials. They authenticate to Livedocs, and the platform handles database authentication based on their workspace permissions. When someone leaves the team, you don’t need to hunt down everywhere they embedded credentials. You just remove them from the workspace.

A few practical approaches work well here. Classify your data sources before connecting them. Not everything needs the same level of protection. Public datasets are different from customer PII. Internal metrics are different from financial data. Before you connect a data source to Livedocs, decide what sensitivity level it is and who should be able to use it in their analyses.

Use workspace separation strategically. Livedocs lets you create multiple workspaces, and for good reason. Don’t put your production customer data and your experimental datasets in the same workspace. Create a workspace for sensitive data analysis with tighter controls and a separate workspace for general analytics where people can experiment freely.

Monitor who’s actually using what data sources. The platform should give you visibility into which documents are connected to which databases, which users are querying them, and when. That audit trail becomes crucial when someone asks “who has access to our customer data?”
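To make the classification and workspace-separation advice concrete, here is a minimal Python sketch of the idea. It is not a Livedocs feature or API. The `Sensitivity` levels, the `DataSource` and `Workspace` types, and the `may_connect` helper are all hypothetical names invented for illustration, and the rule it encodes (a workspace is approved up to some sensitivity level) is one possible policy, not the only one.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical sensitivity levels; a higher value means more restricted."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2  # e.g. financial data
    RESTRICTED = 3    # e.g. customer PII


@dataclass(frozen=True)
class DataSource:
    name: str
    sensitivity: Sensitivity


@dataclass(frozen=True)
class Workspace:
    name: str
    max_sensitivity: Sensitivity  # highest level this workspace is approved for


def may_connect(source: DataSource, workspace: Workspace) -> bool:
    """Allow a connection only if the workspace is approved for that level."""
    return source.sensitivity <= workspace.max_sensitivity


# Illustrative names only.
sandbox = Workspace("analytics-sandbox", Sensitivity.INTERNAL)
customers = DataSource("customers_db", Sensitivity.RESTRICTED)
traffic = DataSource("public_web_traffic", Sensitivity.PUBLIC)

print(may_connect(traffic, sandbox))    # True
print(may_connect(customers, sandbox))  # False
```

Even if a check like this only lives in a team checklist rather than in code, writing the rule down forces the classification decision to happen before the connection does.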

The goal isn’t to make data inaccessible. It’s to make sure the right people have access to the right data at the right time, and that sharing happens intentionally rather than accidentally.

Access Control That Enables Rather Than Blocks

I’ve seen too many organizations implement access controls that are technically secure but practically useless because everyone immediately works around them. That’s not security. That’s security theater that makes actual security worse.

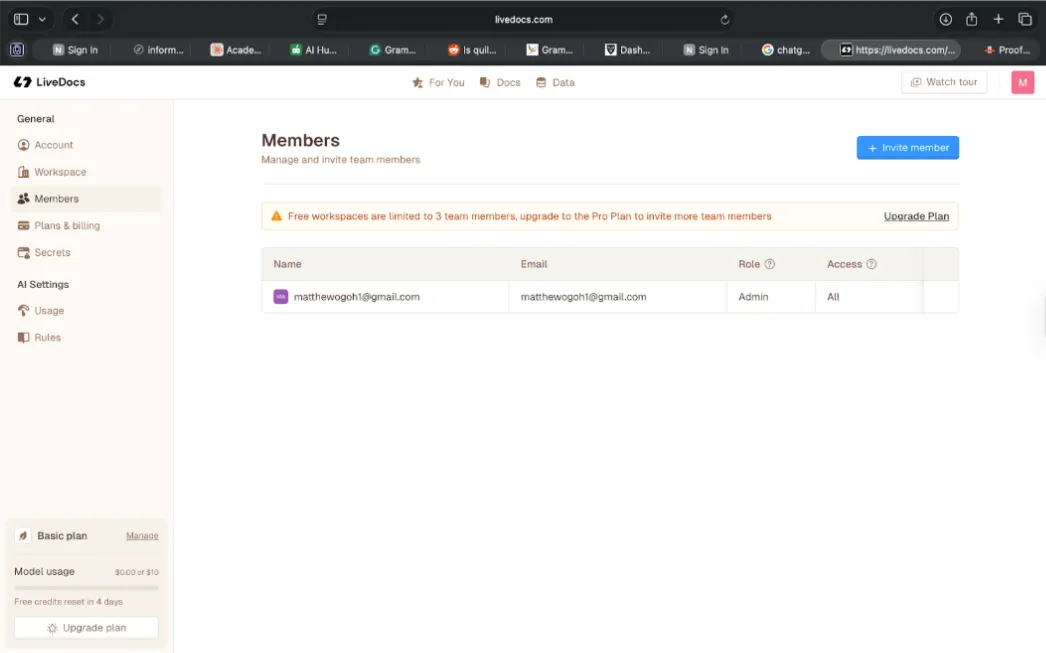

Livedocs takes a reasonably pragmatic approach here with role-based access at multiple levels. At the workspace level, you’ve got Admins who can manage everything, Editors who can create and modify documents, and Viewers who can only see published content. Within documents, you can control who can collaborate in real time versus who just sees the final results.

Figure 2: Workspace member management in Livedocs showing role-based access control. The Admin role provides full workspace control, while Editors and Viewers have progressively limited permissions.

This matters more than it might seem. When you’re building a data analysis workflow, you want your team to be able to iterate quickly. Multiple people should be able to work on the same notebook simultaneously, adding Python cells, trying different visualizations, debugging SQL queries. That’s where the real value comes from.

But you don’t want that same workflow to accidentally get shared with your entire company before it’s ready. That’s where the publishing model becomes a security feature. In Livedocs, documents exist in two states. There’s the working version where your team collaborates, and there’s the published version that others can access. Until you explicitly publish, nobody outside your collaboration group can see what you’re building, even if they somehow get a link.

Here’s what I recommend based on what actually works. Start with the principle of least privilege but make it contextual. Your analysts should be Editors in their team’s workspace so they can build and share freely. But they should be Viewers in other teams’ workspaces where they just need to see results, not modify workflows.

Use the Admin role sparingly. Admins can connect new data sources, manage workspace members, and control settings that affect everyone. That level of access should go to people who understand the security implications. In a security team using Livedocs, maybe the team lead is an Admin while everyone else is an Editor.
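The contextual least-privilege idea above can be sketched in a few lines of Python. This is only a model of the reasoning, not how Livedocs implements roles. The action names in `PERMISSIONS` and the `is_allowed` helper are assumptions loosely based on the Admin, Editor, and Viewer descriptions in this article.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "admin"
    EDITOR = "editor"
    VIEWER = "viewer"


# Hypothetical action names, loosely based on the role descriptions above.
PERMISSIONS = {
    Role.ADMIN: {"view_published", "edit_documents",
                 "connect_data_source", "manage_members"},
    Role.EDITOR: {"view_published", "edit_documents"},
    Role.VIEWER: {"view_published"},
}


def is_allowed(memberships: dict[str, Role], workspace: str, action: str) -> bool:
    """Deny by default: no membership in the workspace means no access."""
    role = memberships.get(workspace)
    return role is not None and action in PERMISSIONS[role]


# One analyst, different roles in different workspaces.
analyst = {"security-team": Role.EDITOR, "finance-team": Role.VIEWER}

print(is_allowed(analyst, "security-team", "edit_documents"))       # True
print(is_allowed(analyst, "finance-team", "edit_documents"))        # False
print(is_allowed(analyst, "security-team", "connect_data_source"))  # False
```

The point of the per-workspace mapping is that the same person can build freely in one place and only read results in another.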

Separate your development and production environments at the workspace level. Have a workspace where your team experiments with new analyses, tries risky queries, and tests AI agents against sample data. Once something works and is validated, move it to your production workspace where it runs against real data and gets published to stakeholders.

Think carefully about what you publish and to whom. Just because you can make a document public doesn’t mean you should. Use Livedocs’ sharing controls to give specific people access to specific documents based on what they actually need to see.

Keeping Credentials Secure

This is where a lot of teams accidentally create security problems. Data analysis workflows need to connect to databases, APIs, cloud storage, and various services. Each connection needs credentials. If analysts are embedding API keys directly in their Python code or hardcoding database passwords in SQL cells, you’ve got a recipe for credential leakage.

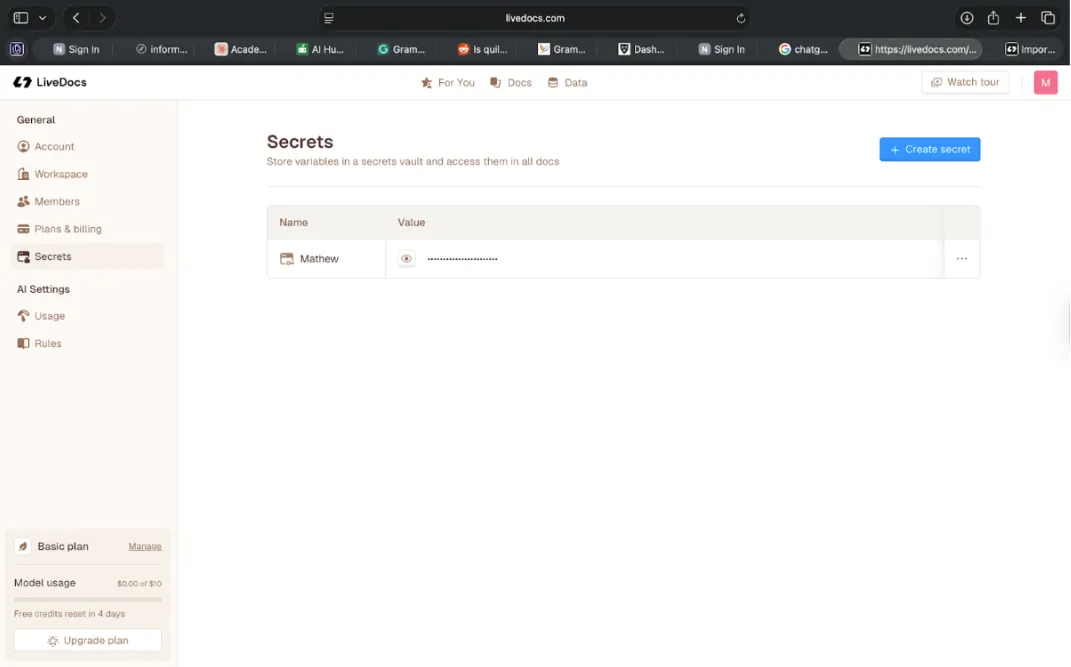

Livedocs handles this through a dedicated Secrets tab that’s designed specifically for managing sensitive credentials. You store your API keys, database passwords, and other secrets there, and then reference them in your code without ever exposing the actual values.

Figure 3: The Secrets tab in Livedocs provides a secure vault for storing API keys, database credentials, and other sensitive information. Values are masked and can be referenced in code without exposing them.

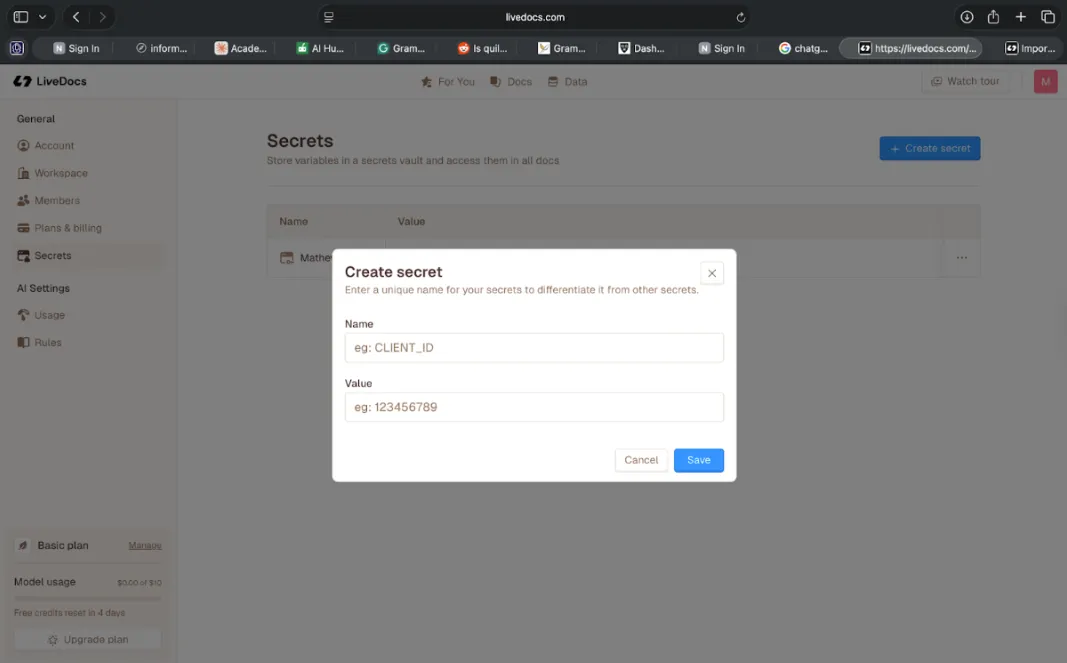

Figure 4: Creating a new secret in Livedocs. The platform ensures credentials are stored securely and never appear in notebook code, preventing accidental exposure through sharing or version control.

The security benefit here is centralization. Instead of credentials scattered across dozens of notebooks in different people’s personal projects, they’re managed in one place. When a credential needs to be rotated, you update it in the Secrets tab and every notebook using that secret automatically gets the new value. No hunting through code to find where old credentials are hardcoded.

When someone leaves your organization, you don’t need to wonder which credentials they might have copied from notebooks, because they never had access to the actual credential values, just the ability to use them through the platform.

Here’s what this looks like in practice. Say you’re connecting to a third-party threat intelligence API. Instead of putting your API key directly in Python code like api_key = "sk_live_abc123...", you store it in Secrets with a name like threat_intel_api_key. Then in your code, you reference it through Livedocs’ secrets API. The key never appears in your notebook. Anyone who can view or edit that notebook still can’t see the actual API key.
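As a rough illustration of that pattern, the sketch below reads the key by name at runtime instead of embedding it. This article does not show the actual Livedocs secrets API, so `get_secret` is a hypothetical stand-in backed by an environment variable. The variable name `THREAT_INTEL_API_KEY` and the `mask` helper are likewise assumptions made for the example.

```python
import os


def get_secret(name: str) -> str:
    """Fetch a secret by name from the environment; fail loudly if missing.

    Stand-in for a platform secrets lookup. The real Livedocs call may differ.
    """
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Secret {name!r} is not configured")
    return value


def mask(value: str, visible: int = 4) -> str:
    """Return a log-safe form that shows only the last few characters."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


# Demo only: in practice the value is set outside the notebook, never in code.
os.environ.setdefault("THREAT_INTEL_API_KEY", "demo-value-not-a-real-key")

api_key = get_secret("THREAT_INTEL_API_KEY")
headers = {"Authorization": f"Bearer {api_key}"}  # pass to your HTTP client
print("Using key:", mask(api_key))
```

Whatever the lookup mechanism, the notebook code should only ever contain the secret's name, and anything printed or logged should be masked.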

A few practical guidelines prevent most credential problems. Never put credentials directly in code. Ever. If you see actual API keys, passwords, or tokens in a notebook, that’s a security incident waiting to happen. Use the Secrets tab for everything sensitive.
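One way to catch credentials that slip into code is a simple scan over notebook cells. The following Python sketch is a heuristic only: the two regular expressions are assumptions about what a hardcoded credential tends to look like, they will miss some cases and flag some false positives, and the cell contents are made-up examples. A dedicated secret scanner is a better fit for anything beyond a quick check.

```python
import re

# Heuristic patterns only; expect both misses and false positives.
CREDENTIAL_PATTERNS = [
    # A credential-like variable name assigned a quoted literal of 8+ characters.
    re.compile(
        r"""(?i)\b\w*(api_key|apikey|secret|token|password|passwd)\w*\s*=\s*["'][^"']{8,}["']"""
    ),
    # A URL with an embedded user:password pair.
    re.compile(r"[a-z][a-z0-9+.-]*://[^/\s:@]+:[^/\s:@]+@"),
]


def find_hardcoded_credentials(cells: list[str]) -> list[tuple[int, str]]:
    """Return (cell_index, offending_line) pairs for suspicious lines."""
    findings = []
    for index, cell in enumerate(cells):
        for line in cell.splitlines():
            if any(pattern.search(line) for pattern in CREDENTIAL_PATTERNS):
                findings.append((index, line.strip()))
    return findings


# Made-up notebook cells for illustration.
cells = [
    'api_key = "not-a-real-key-123"',
    'api_key = get_secret("THREAT_INTEL_API_KEY")',
    'engine_url = "postgresql://analyst:hunter2@db.internal/customers"',
]

for cell_index, line in find_hardcoded_credentials(cells):
    print(f"cell {cell_index}: {line}")
```

In this example the first and third cells are flagged, while the second, which looks the value up by name, is not.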

Treat secrets with the same sensitivity as the systems they protect. If an API key gives access to customer data, it should be managed as carefully as that customer database itself. Don’t share secrets more broadly than necessary. Just because someone needs to work with a particular API doesn’t mean they need to create or modify the secret for it.

Rotate credentials regularly and audit secret usage. Livedocs should give you visibility into which secrets exist and which documents are using them. Use that to understand your credential footprint and identify secrets that might need rotation or retirement.

Securing the AI Agents Themselves

Here’s something that doesn’t get discussed enough. When you’re building AI agents in platforms like Livedocs, you’re essentially creating autonomous code that can query databases, call APIs, process data, and make decisions. How do you ensure those agents are doing what they’re supposed to?

The answer starts with transparency and audit trails. Every Livedocs document has a complete history. You can see who created it, who modified it, what data sources it connects to, and what code it runs. If an AI agent starts behaving strangely or producing unexpected results, you can trace back through its history to understand what changed.

This is one reason why the publishing model matters for security. When you’re iterating on an AI workflow, you’re making changes frequently. Some of those changes might break things or produce incorrect results. By keeping the working version separate from the published version, you create a safety barrier. Your experimental changes don’t immediately affect everyone who relies on that analysis.

For security-critical workflows, I recommend implementing a review process before publishing. In Livedocs, this could be as simple as having a team lead review notebooks before they get published to broader audiences. Look at what data sources are being used, what the code is actually doing, and whether the outputs make sense.

Monitor your AI agents for unusual behavior. If a notebook that normally queries a specific table suddenly starts accessing different data, that’s worth investigating. If an analysis that usually completes in seconds starts taking minutes, something changed. These anomalies don’t necessarily mean something is wrong, but they warrant a look.
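The two signals mentioned above, new tables and unusually long runs, are easy to check once you have run history. Here is a minimal Python sketch under stated assumptions: the `Run` record, the `flag_anomalies` function, and the five-times-the-median threshold are all hypothetical, and how you obtain run history from your platform is left out.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Run:
    tables: frozenset[str]  # tables the notebook queried in this run
    seconds: float          # wall-clock duration of the run


def flag_anomalies(history: list[Run], latest: Run,
                   slow_factor: float = 5.0) -> list[str]:
    """Compare the latest run with past runs and describe anything unusual."""
    if not history:
        return []  # nothing to compare against yet
    reasons = []
    known_tables = frozenset().union(*(run.tables for run in history))
    new_tables = latest.tables - known_tables
    if new_tables:
        reasons.append(f"new tables accessed: {sorted(new_tables)}")
    typical = median(run.seconds for run in history)
    if latest.seconds > slow_factor * typical:
        reasons.append(
            f"run took {latest.seconds:.0f}s, typical is {typical:.0f}s"
        )
    return reasons


# Made-up history for illustration.
history = [
    Run(frozenset({"auth_events"}), 4.0),
    Run(frozenset({"auth_events"}), 5.0),
    Run(frozenset({"auth_events"}), 6.0),
]
latest = Run(frozenset({"auth_events", "customers"}), 180.0)

for reason in flag_anomalies(history, latest):
    print(reason)
```

A flag from a check like this is a prompt to look, not proof of a problem, which matches how these anomalies should be treated.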

Keep versions of important analyses. Livedocs documents exist in a workspace, and that workspace should have backups. If you need to roll back an AI agent to a previous version because something went wrong, you should be able to. This is basic operational security, but it’s surprising how many teams don’t think about it until they need it.

Managing External Connections

Low-code AI platforms are powerful precisely because they connect to everything. Databases, APIs, file storage, SaaS applications. In Livedocs, you might have notebooks that pull data from PostgreSQL, fetch additional context from a REST API, upload results to cloud storage, and send notifications to Slack. Each connection is a potential security boundary.

The centralized data source management in Livedocs actually helps a lot here. Instead of every analyst maintaining their own connections with their own credentials, data sources are workspace-level resources. When you connect a PostgreSQL database, you do it once at the workspace level. Everyone who needs to use that database in their analyses references the same connection.

This gives you visibility and control. You can see every external system your team is connecting to. You can manage the credentials for those connections centrally. You can monitor who’s actually using each data source and for what purpose. When something goes wrong with an external connection, you have a single place to fix it.

The encryption side is handled by the platform. Connections to external databases and APIs should use SSL/TLS. Data at rest should be encrypted. These aren’t things individual users need to worry about. The platform handles them, and security teams can verify the configuration once rather than auditing every individual connection.

What you do need to think about is which external systems should be accessible from your data analysis environment. Just because you can connect Livedocs to a particular database doesn’t mean you should. Some data is so sensitive that it shouldn’t be in a collaborative analysis platform at all, even with good access controls.

Create clear policies about which data sources can be connected and by whom. Maybe anyone on the data team can upload CSV files or connect to your data warehouse, but connecting to production databases requires approval from a team lead. These policies should match your organization’s risk tolerance and the sensitivity of different data assets.

Compliance That Doesn’t Slow Everything Down

If you’re working with customer data in Europe, GDPR applies. Healthcare data means HIPAA. Financial services brings SOX and other regulations. The compliance landscape is complex and getting more so.

The traditional approach was building compliance into individual applications after they were built. This was expensive, time-consuming, and error-prone. With platforms like Livedocs, you can build compliance controls at the platform level and have them apply consistently across all analyses.

Data retention is a good example. Instead of trying to implement retention policies in every individual notebook, you handle retention at the data source level. When customer data flows into Livedocs from your database, the platform can enforce retention rules automatically. Old data gets removed on schedule without requiring every analyst to remember to do it.

Audit logging is similar. Livedocs tracks who accessed what data when. This audit trail serves multiple purposes. It helps with security monitoring, catching unusual access patterns that might indicate a problem. It helps with compliance, demonstrating to auditors that you have visibility and control over data access. And it helps with operational troubleshooting when someone needs to understand how a particular analysis was built.

The publish and share controls become compliance tools. When you need to demonstrate that customer PII is only accessible to authorized personnel, you can point to workspace permissions and document sharing settings. The platform enforces access control. You don’t have to trust that every analyst remembers the compliance rules.

For teams subject to strict regulations, consider having separate workspaces for regulated data. Your HIPAA-covered healthcare data might live in a dedicated workspace with enhanced access controls, detailed audit logging, and restricted data connections. Your general business analytics can use a different workspace with more flexibility. The platform lets you apply different security postures to different use cases.

Building Security Into Your Workflows: What Actually Works

Enough theory. Let’s talk about what this looks like in practice when you’re actually using Livedocs day to day.

Start with the Right Workspace Structure

Before anyone creates their first notebook, think about how you’ll organize workspaces. This decision shapes everything else about your security posture. In Livedocs, workspaces are the primary security boundary. People in a workspace can see and use the data sources in that workspace. They can collaborate on documents in that workspace. Workspace settings and permissions apply to everyone in the workspace.

Figure 5: Workspace configuration in Livedocs showing workspace naming, API settings, and integration options. Proper workspace setup is the foundation of security in collaborative data analysis.

Most teams need at least two workspaces to start. One for production analyses that stakeholders rely on, with tighter controls and only validated data sources. Another for experimental work where analysts can safely break things without affecting anyone else. As your usage grows, you might add workspaces for different teams, different security contexts, or different data sensitivity levels.

When setting up a new workspace, start by defining who should be in it and at what permission level. Not everyone needs to be an Editor. Stakeholders who just need to see results should be Viewers. People who need to build and modify analyses should be Editors. Limit the Admin role to people responsible for managing the workspace and its security.

Connect your data sources thoughtfully. Just because a database is available doesn’t mean it should be connected to every workspace. Connect only the data sources actually needed for the work happening in that workspace. This limits the blast radius if something goes wrong.

Make Security Visible But Not Annoying

Users should understand when they’re working with sensitive data, but security shouldn’t constantly interrupt their flow. Good platforms show security context naturally. In Livedocs, this comes through the workspace you’re in, the data sources available, and the publishing state of documents you’re working on.

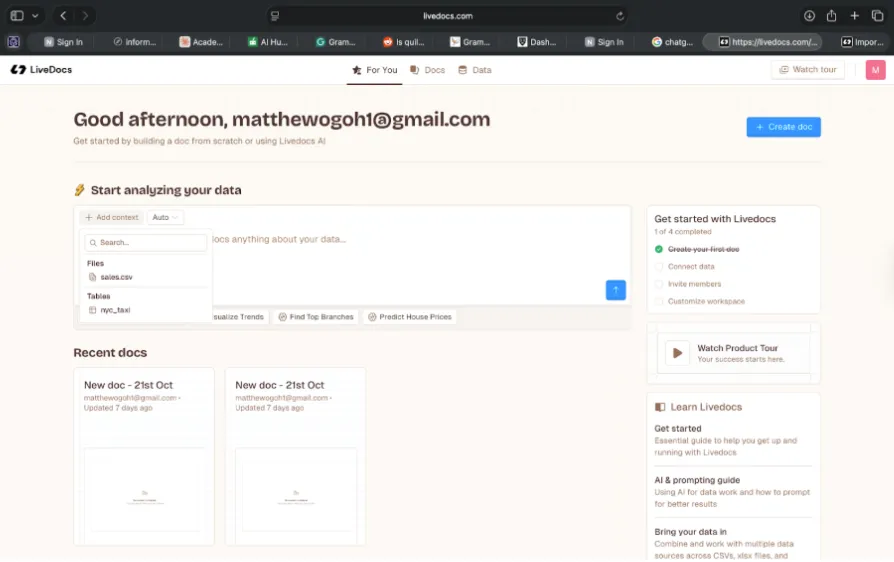

Figure 6: The Livedocs workspace interface showing the “For You” personalized view, recent documents, and available data sources. The clean interface makes it easy to understand your current context and available resources.

Train your teams on what these indicators mean. When someone is working in the production workspace versus the experimental workspace, they should know the difference in expectations. When they’re about to publish a document that will be visible outside their immediate team, they should understand the implications.

Security awareness works best when it’s contextual and just-in-time. Instead of annual training that people forget, teach security concepts when they’re relevant. When someone is about to connect a new database, that’s the right time to discuss data classification. When they’re publishing their first document, that’s the right time to talk about sharing controls and data exposure.

Implement Progressive Security Based on Sensitivity

Not everything needs maximum security controls. A notebook analyzing public website traffic is different from one processing customer PII. A workspace for marketing analytics is different from one for financial reporting. Apply security controls proportional to the sensitivity and risk.

For low-sensitivity work, focus on preventing accidents rather than enforcing strict controls. Basic workspace permissions, straightforward data source access, and simple publishing controls are probably sufficient. The goal is to let people work efficiently while avoiding obvious mistakes.

For medium-sensitivity work, add more structure. Review who has access to the workspace and why. Be more careful about which data sources are connected and who can use them. Consider having a review step before publishing documents to broader audiences. Use the Secrets tab for all credentials rather than letting anything slip into code.

For high-sensitivity work, bring out the full security toolkit. Dedicated workspace with minimal membership. Strict controls on data source connections. Mandatory review before publishing anything. Detailed audit logging. Regular access reviews to ensure only people who currently need access have it. Consider using separate infrastructure if the platform supports it.
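The three tiers above amount to a checklist that grows with sensitivity, which can be written down as data. The Python sketch below is one hypothetical encoding: the control names are shorthand for the measures described in this section, not settings that exist in Livedocs, and `missing_controls` is an illustrative helper.

```python
# Hypothetical control names, shorthand for the three tiers described above.
LOW = {"workspace_permissions", "publishing_controls"}
MEDIUM = LOW | {"access_review", "pre_publish_review",
                "secrets_for_all_credentials"}
HIGH = MEDIUM | {"dedicated_workspace", "detailed_audit_logging",
                 "recurring_access_review"}

REQUIRED_CONTROLS = {"low": LOW, "medium": MEDIUM, "high": HIGH}


def missing_controls(tier: str, enabled: set[str]) -> set[str]:
    """Return the controls a workspace still needs for its sensitivity tier."""
    return REQUIRED_CONTROLS[tier] - enabled


# A workspace that meets the medium tier but now holds high-sensitivity data.
enabled = set(MEDIUM)
print(sorted(missing_controls("high", enabled)))
```

Building each tier on top of the previous one keeps the controls proportional: moving a workspace up a tier only adds requirements, it never removes them.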

Monitor Actively Rather Than Reactively

Logs are only useful if someone actually looks at them. Set up monitoring for security-relevant events in your Livedocs usage. New data sources being connected to workspaces. Documents being published that access sensitive data. Users with admin privileges making configuration changes. Unusual query patterns that might indicate data exfiltration.
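A first pass at this kind of monitoring can be a small filter over exported audit events. The Python sketch below assumes events arrive as dictionaries with a `type` field; that shape, the event type names, and the `security_relevant` function are all hypothetical, since the actual audit log format of your platform may look quite different.

```python
# Hypothetical event types; a real audit log export will use its own names.
WATCHED_EVENTS = {
    "data_source_connected",
    "document_published",
    "admin_setting_changed",
}


def security_relevant(events: list[dict], sensitive_sources: set[str]) -> list[dict]:
    """Keep only the events that deserve a human look."""
    flagged = []
    for event in events:
        if event["type"] not in WATCHED_EVENTS:
            continue
        # A publish event matters here only if the document uses sensitive data.
        if event["type"] == "document_published" and not (
            set(event.get("data_sources", [])) & sensitive_sources
        ):
            continue
        flagged.append(event)
    return flagged


# Made-up events for illustration.
events = [
    {"type": "document_viewed", "user": "ana"},
    {"type": "data_source_connected", "user": "raj", "source": "customers_db"},
    {"type": "document_published", "user": "ana",
     "data_sources": ["public_web_traffic"]},
    {"type": "document_published", "user": "lee",
     "data_sources": ["customers_db"]},
]

for event in security_relevant(events, {"customers_db"}):
    print(event["type"], "by", event["user"])
```

Keeping the watched list short is deliberate: a filter that flags everything gets ignored just as quickly as a log nobody reads.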

The goal isn’t to catch bad actors, though that’s a nice benefit. The goal is to understand how your team is actually using the platform and whether that usage matches your security expectations. Maybe you discover that people are sharing more documents externally than you realized. Maybe a data source you thought was low-use is actually critical to multiple teams. This understanding lets you make informed decisions about security controls.

In Livedocs, you can actually build monitoring dashboards using the same tools you’re monitoring. Create a workspace for security analytics. Connect it to your platform’s audit logs if available, or use the platform’s built-in reporting. Build notebooks that analyze usage patterns, track access to sensitive data sources, and flag anomalies. Use AI agents to help identify unusual behavior.

Test Your Security Regularly

Security isn’t something you set up once and forget about. Run regular exercises to verify your controls work as intended. Can users access data they shouldn’t? Do publishing controls actually prevent unauthorized viewing? Can you trace data lineage through complex analyses? What happens if credentials get compromised? How quickly can you revoke access in an emergency?

These tests don’t need to be elaborate. Once a quarter, have someone outside the immediate team try to access resources they shouldn’t have access to. Try sharing a document that contains sensitive data and verify who can actually see it. Attempt to use a database connection from outside the authorized workspace and confirm it fails.

The audit trails in Livedocs make these tests easier. You can review what happened, who did it, and whether your security controls behaved correctly. If you find gaps, fix them before they become real problems.

Plan for Incidents Before They Happen

Something will eventually go wrong. Maybe credentials leak. Maybe an AI agent gets misconfigured and produces incorrect results that get published. Maybe someone accidentally shares a document containing sensitive data. Having an incident response plan specific to your Livedocs usage makes a huge difference.

Document how to quickly disable a compromised data source connection. If a database credential is exposed, you need to be able to disconnect it from Livedocs immediately while you rotate the credential. Know where to look to understand the scope of an incident. If a document containing PII was accidentally made public, you need to know who accessed it and when.

Identify who needs to be notified when different types of incidents occur. A minor misconfiguration might just need the team lead. A data breach requires legal, security, compliance, and possibly customers. Having communication templates ready means you can respond faster and more consistently.

Know how to restore from backups if needed. If critical analyses get corrupted or deleted, you need a way to recover them. If an AI agent needs to be rolled back to a previous version, you should be able to do it quickly.

Run drills. Actually practice your incident response. This doesn’t need to be a huge production. Once or twice a year, simulate something going wrong and walk through your response process. You’ll discover gaps in your procedures and areas where documentation needs improvement. Much better to find these during a drill than during a real incident.

The Real Competitive Advantage of Getting Security Right

Here’s something I’ve learned after years in cybersecurity. Security doesn’t slow innovation down. Bad security slows innovation down.

When people are afraid their data analysis workflows might expose sensitive data or violate compliance requirements, they hesitate. They wait for approvals that never come. They build shadow IT solutions that are even less secure. Or they just don’t use powerful tools like Livedocs at all, even though those tools could dramatically improve their work.

Good security does the opposite. When your team knows the platform won’t let them accidentally do something dangerous, they move faster. They experiment more freely. They try new analyses and build new AI agents with confidence because the security guardrails are built in.

This is the real competitive advantage of platforms like Livedocs when used with proper security. The combination of powerful AI capabilities, collaborative workflows, and built-in security controls means your team can do things that would be too slow or too risky with traditional approaches.

While your competitors are still debating whether AI data analysis tools are secure enough to adopt, you’re already deploying production workflows that analyze threats faster, derive insights quicker, and make better decisions. That gap compounds over time.

Organizations that figure this out early have a massive advantage. They build security into their data analysis practices from the beginning rather than trying to retrofit it later. They train their teams on how to use collaborative AI platforms securely rather than prohibiting their use entirely. They create environments where security enables speed rather than preventing it.

Moving Forward

The integration of AI into data analysis workflows isn’t slowing down. Platforms like Livedocs are making sophisticated analyses accessible to more people across more industries than ever before. The barrier to entry for powerful data work keeps dropping.

As security professionals, we have a choice about how to respond. We can treat this as a threat, implementing restrictive controls that push innovation into shadow IT where we have even less visibility and control. Or we can treat it as an opportunity to build security that enables innovation.

I’m obviously betting on the second approach. The future of data work is collaborative, AI-powered, and fast-moving. Security needs to be all of those things too. That means building it into platforms rather than layering it on top. It means making security controls that work with how people actually use these tools rather than fighting against their workflows. It means focusing on enabling safe experimentation rather than preventing all risk.

If you’re just starting to explore platforms like Livedocs, think about security from day one. Not because you’re paranoid, but because getting it right from the start means you never have to slow down later. Set up your workspaces thoughtfully. Connect data sources deliberately. Use secrets management for credentials. Apply publishing controls appropriately. Build security into how your team works with the platform rather than treating it as a separate concern.

If you’re already using these tools, take a fresh look at your security posture. Are your workspace permissions still appropriate for who’s on your team now? Are data sources classified correctly based on sensitivity? Are credentials managed securely or embedded in code? Can you trace what happens to sensitive data through your analyses? Do your security controls enable your team or frustrate them?

The questions aren’t that different from traditional security. The implementation just looks different when you’re working with collaborative AI platforms.

We’re building something new here. Data analysis workflows that are both powerful and secure, accessible and controlled, innovative and compliant. That’s not a contradiction. That’s just good engineering combined with thoughtful security.

The organizations that figure this out will move faster, analyze better, and decide quicker than everyone else. The tools are here. The platforms work. The opportunity is real. Now it’s about building the security foundation that lets teams take full advantage without creating unnecessary risk. Let’s build it right.

About the Author

Mathew Ogoh is a cybersecurity professional specializing in secure implementation of AI and data analysis platforms. With a background in both security operations and data engineering, he helps organizations adopt new technologies while maintaining robust security postures.
